Modern advancements in artificial intelligence have sparked debates about the true nature of large language models. Many wonder whether these sophisticated tools represent merely scaled-up versions of traditional neural architectures. To unravel this, we must examine their foundational design and operational mechanics.

At their core, LLMs rely on transformer-based frameworks – a revolutionary approach enabling machines to interpret linguistic patterns with remarkable accuracy. Unlike earlier systems, these models employ attention mechanisms to prioritise contextual relationships within data sequences. This specialisation allows them to process complex language structures efficiently.

The scale of parameters within modern large language models sets them apart from conventional neural configurations. With billions of adjustable weights, they develop nuanced representations of human language through extensive training on diverse datasets. Such capacity facilitates tasks ranging from text generation to semantic analysis.

While sharing DNA with basic neural principles, LLMs demonstrate unique characteristics that redefine their role in artificial intelligence. This exploration clarifies how transformer-driven architectures and specialised training methods elevate their capabilities beyond standard neural approaches.

Introduction to Large Language Models and Neural Networks

Generative technologies now reshape how businesses approach problem-solving, merging computational power with human-like adaptability. A Forrester survey reveals 83% of major North American firms actively test these tools, signalling a paradigm shift in enterprise strategies.

From Predictive Engines to Context-Aware Partners

Early machine learning frameworks focused on pattern recognition within narrow parameters. Today’s advanced architectures process linguistic subtleties through layered decision-making structures. This progression enables tasks like sentiment interpretation and cross-language translation at industrial scales.

Strategic Value in Commercial Operations

Organisations leverage these technologies to automate customer interactions, draft legal documents, and analyse market trends. One financial institution reduced research hours by 40% using AI-driven data synthesis. However, effective deployment requires balancing innovation with ethical governance frameworks.

Commercial adoption demands technical expertise and risk management protocols. Teams must evaluate infrastructure needs alongside workforce training programmes to maximise returns from AI investments.

Defining Large Language Models: The Building Blocks

Modern language processing systems rely on layered frameworks that merge computational scale with linguistic expertise. These frameworks enable machines to interpret and generate text with human-like precision, powering tools from chatbots to research assistants.

Core Concepts and Capabilities

Large language models operate by analysing patterns within vast textual datasets. Through exposure to billions of words, they learn contextual relationships between phrases, symbols, and concepts. This allows them to perform diverse tasks like summarising documents or translating languages.

Pre-training and Fine-tuning Processes

Two critical training phases shape these systems:

- Pre-training: Models digest trillions of words from sources like Wikipedia, building general language understanding through unsupervised learning

- Fine-tuning: Specialised datasets then refine capabilities for specific applications, such as legal contract analysis or medical report generation

This dual approach combines broad knowledge with task-specific accuracy. For example, a model pre-trained on web content might later adapt to technical manuals for engineering applications. The process ensures adaptability across industries while maintaining core linguistic competencies.

What Are Neural Networks? Insights into the Architecture

At the heart of modern artificial intelligence lies a framework inspired by biological cognition. These digital systems process information through interconnected units, forming the backbone of pattern recognition and decision-making technologies. Their design enables machine learning at scales previously unimaginable.

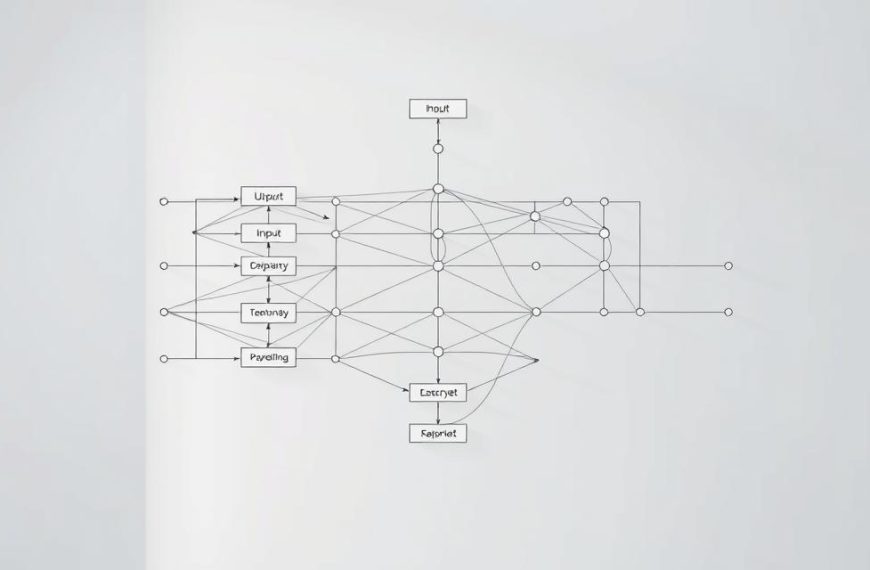

Structure and Components of Neural Networks

Every neural network comprises three primary layers. The input layer receives raw data, whether text pixels or sensor readings. Hidden layers then transform this information through weighted connections and activation functions. Finally, the output layer delivers predictions or classifications.

Each artificial neuron calculates outputs using weighted sums and biases. These components determine how signals propagate through the system. Modern architectures stack dozens of hidden layers, enabling intricate data transformations.

The Learning Process and Parameters

Training involves adjusting connection weights based on errors. Algorithms like backpropagation fine-tune millions of parameters to improve accuracy. This iterative process allows networks to recognise complex patterns in datasets.

Scale plays a critical role in capability. GPT-3, for instance, uses 96 layers and 175 billion parameters to handle linguistic tasks. Such depth enables nuanced understanding while demanding substantial computational resources.

These frameworks demonstrate how simple mathematical units, when massively interconnected, achieve remarkable cognitive feats. Their evolution continues to shape advancements in language processing and predictive analytics.

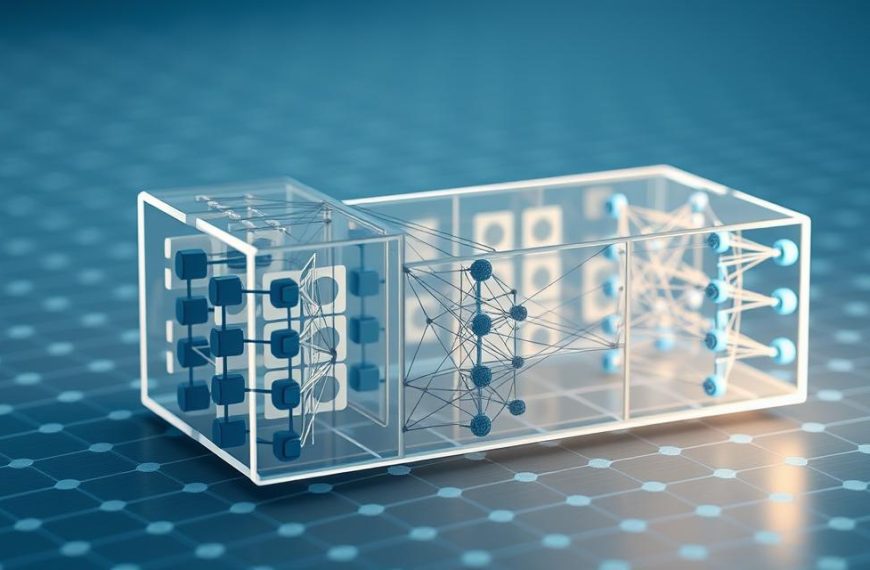

The Transformer Architecture: Revolutionising Natural Language Processing

Transformer architectures have redefined computational linguistics since their 2017 debut. These frameworks introduced a paradigm shift in how machines interpret human communication, prioritising contextual relationships over rigid sequential analysis. Their design addresses critical limitations of earlier systems, particularly in handling long-range dependencies within text.

Understanding Self-Attention Mechanisms

Self-attention forms the backbone of transformer effectiveness. This technique enables simultaneous evaluation of all words in a sequence, mapping connections regardless of positional distance. For example, in the sentence “The bank charges interest on loans but not near the river bank,” the system distinguishes between financial and geographical meanings through contextual analysis.

Parallel Processing and Contextualisation

Traditional models processed language sequentially, creating bottlenecks. Transformers analyse entire documents at once through parallel computation, dramatically accelerating training times. This approach also preserves context across lengthy passages, maintaining thematic coherence in outputs.

| Feature | Transformers | Traditional Models |

|---|---|---|

| Processing Method | Parallel | Sequential |

| Context Handling | Full-document awareness | Limited window |

| Training Speed | Weeks | Months |

| Document Length | 10k+ tokens | 512 tokens |

Google’s groundbreaking research demonstrated these advantages through encoder-decoder structures. Such configurations empower systems to both interpret inputs and generate coherent responses, enabling applications from real-time translation to complex dialogue management.

Processing Text Data with LLMs: From Tokens to Meaning

Modern computational linguistics hinges on converting human communication into machine-readable formats. This transformation involves layered mathematical processes that bridge raw text and contextual understanding. At its core lies the ability to decode linguistic patterns through advanced numerical frameworks.

Word Embeddings and Multi-dimensional Representations

Language models map vocabulary into intricate vector spaces during initial tokenisation processes. Each term becomes coordinates in hyper-dimensional realms – think of “king” positioned closer to “queen” than “car”. These representations capture semantic ties, enabling calculations of similarity between unrelated concepts.

| Aspect | Traditional Methods | Transformer-Based Systems |

|---|---|---|

| Dimensions | 50-300 | 768-12,288 |

| Context Handling | Static | Dynamic |

| Training Data | Millions of words | Trillions of tokens |

Contextualising Language in Neural Models

Vector spaces alone can’t handle phrases like “bass guitar” versus “bass fish”. Advanced systems employ attention layers that weigh surrounding words dynamically. This allows shifting meanings based on sentence structure – crucial for interpreting idioms or technical jargon.

Through continuous training, models develop nuanced representations that mirror human linguistic flexibility. The result? Systems capable of distinguishing “light” as illumination versus weight reduction in varied contexts.

is an llm a neural network: Unpacking the Core Question

Technological debates often blur distinctions between foundational frameworks and their specialised descendants. To clarify: large language models share core principles with conventional computational systems but evolve them for linguistic mastery.

Comparative Analysis of Linguistic and Traditional Frameworks

Standard computational systems excel at pattern recognition in structured data like images or spreadsheets. Language processing demands handling ambiguous relationships between words across extended contexts. Where traditional setups use fixed-layer hierarchies, transformer-based designs employ dynamic attention layers.

Consider email classification versus crafting poetry. Basic systems might sort messages by keywords, while advanced models generate metaphor-rich verses. This difference stems from architectural adaptations enabling contextual fluidity.

Fusion of Learning Methods

Modern language processors combine established machine learning techniques with linguistic innovations. Backpropagation adjusts weights across billions of parameters, while self-attention mechanisms track word dependencies. Training leverages both broad textual exposure and task-specific fine-tuning.

Financial institutions use these hybrid systems to analyse earnings calls. The technology identifies sentiment shifts and technical jargon better than conventional tools. Such precision comes from layered learning approaches unavailable in earlier architectures.

These evolutionary steps demonstrate how deep learning principles adapt to language’s complexities. While rooted in shared mathematical foundations, specialised frameworks achieve what generic networks cannot – human-like textual comprehension.

Real-World Applications of Large Language Models

Businesses across sectors now harness advanced language technologies to solve complex operational challenges. These tools transform raw data into actionable insights, driving efficiency in customer engagement and strategic decision-making.

Use Cases in Sentiment Analysis and Conversational AI

Sentiment analysis enables brands to gauge public opinion at scale. Systems scan social media posts, reviews and surveys, detecting emotional undertones with 90% accuracy. This analysis helps companies adjust marketing strategies in real time.

Conversational AI handles customer queries through chatbots that mimic human dialogue. Banks use these tools to resolve 70% of routine inquiries without staff intervention. Such applications reduce costs while improving service accessibility.

Industry-Specific Implementations

Healthcare researchers employ language models to decode protein interactions in medical literature. One example saw a 30% acceleration in drug discovery timelines through automated data synthesis.

Financial institutions predict market shifts by analysing earnings calls and regulatory filings. Marketing teams automate content generation, producing targeted campaigns that adapt to trending topics. These tasks demonstrate how tailored implementations unlock sector-specific value.