Modern artificial intelligence relies on layered computational structures to solve problems that traditional methods cannot handle. These systems, built with sophisticated mathematical models, have transformed how machines interpret data. Statisticians and data scientists now use them to achieve unprecedented accuracy in pattern recognition and decision-making tasks.

Consider how early machine learning programs generated basic shapes, compared with today’s tools that replicate artistic styles such as van Gogh’s brushwork. This leap stems from architectures with multiple processing layers. A network with two or more hidden layers is usually described as a deep neural network, and this depth lets it move beyond simple analysis to uncover intricate relationships within data.

The practical value lies in their ability to automate complex tasks. From medical diagnostics to self-driving cars, these models process information through progressive abstraction. Each layer refines the input, gradually building a nuanced understanding that single-layer systems cannot match.

Their adoption across industries highlights a fundamental shift in computational approaches. Rather than relying on rigid rules, modern AI systems learn through exposure to vast datasets. This adaptability makes them indispensable in our increasingly data-driven world.

Understanding the Basics of Neural Networks

Biological processes inspire how machines process complex information. These systems, called neural networks, use interconnected units to analyse patterns and make decisions. Their design mirrors how brain cells communicate, enabling them to tackle tasks like image classification or language translation.

What Neural Networks Do

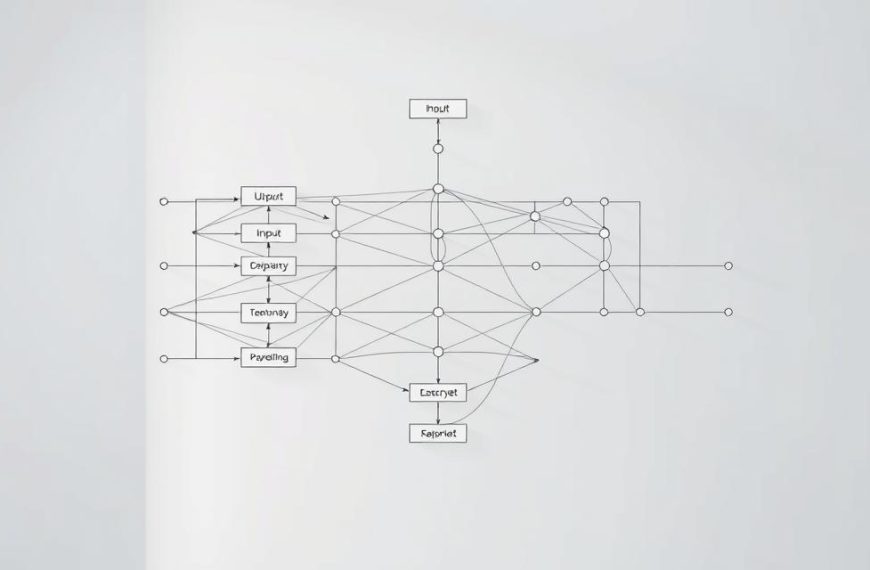

A neural network functions through nodes that mimic biological neurons. Each node activates when it receives sufficient input, passing signals to other nodes via weighted connections. Together, the nodes organise data processing into three stages: input reception, hidden-layer analysis, and output generation.

Nodes: The Brain’s Digital Counterparts

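Like neurons firing electrical impulses, the simplest nodes use a mathematical threshold: if the weighted sum of their inputs exceeds a set value, the node transmits a signal onward. Modern networks usually replace this hard threshold with a smooth activation function such as the sigmoid or ReLU, which gives a graded rather than all-or-nothing response and suits gradient-based training. This mechanism allows networks to prioritise relevant information and ignore noise, much like how our brains filter sensory input.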
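The threshold behaviour described above can be sketched in a few lines of Python. This is a minimal illustration that assumes hand-picked inputs, weights and a threshold of 0.5 (none of these values come from the text); it contrasts the classic all-or-nothing threshold unit with the sigmoid and ReLU activations that modern networks typically use.

```python
import math


def threshold_node(inputs, weights, threshold):
    """Classic threshold unit: fires (returns 1) only if the weighted sum exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def sigmoid(z):
    """Smooth alternative: squashes any weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear unit: passes positive sums through unchanged, outputs 0 otherwise."""
    return max(0.0, z)


# Hypothetical example: three input signals and hand-picked weights.
inputs = [0.9, 0.2, 0.4]
weights = [0.8, -0.5, 0.3]
z = sum(x * w for x, w in zip(inputs, weights))  # 0.72 - 0.10 + 0.12 = 0.74

print(threshold_node(inputs, weights, threshold=0.5))  # fires, because 0.74 > 0.5
print(round(sigmoid(z), 3))                            # graded response instead of 0/1
print(relu(z))
```

The threshold unit can only say yes or no, while the sigmoid and ReLU outputs change smoothly as the weighted sum changes, which is what lets training nudge weights in small steps.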

Layered Architecture

A typical feedforward neural network contains:

- Input layer: Receives raw data (numbers, pixels, text)

- Hidden layers: Process relationships using adjustable weights

- Output layer: Delivers final predictions or classifications

This structure enables progressive refinement of information, transforming basic inputs into meaningful conclusions.
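A minimal sketch of how data flows through the three kinds of layer, assuming a tiny hypothetical network with 2 inputs, 3 hidden nodes and 1 output, hand-picked weights and sigmoid activations. In practice these weights would be learned rather than written by hand.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each node takes a weighted sum of all inputs plus a bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hypothetical 2-3-1 network with hand-picked weights (a trained network would learn these).
hidden_weights = [[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]]  # 3 hidden nodes, 2 inputs each
hidden_biases = [0.0, -0.1, 0.2]
output_weights = [[1.2, -0.4, 0.6]]                      # 1 output node, 3 hidden inputs
output_biases = [-0.3]

raw_input = [1.0, 0.5]                                          # input layer: raw data
hidden = dense_layer(raw_input, hidden_weights, hidden_biases)  # hidden layer: intermediate features
output = dense_layer(hidden, output_weights, output_biases)     # output layer: final prediction
print(hidden, output)
```

Each call to `dense_layer` is one stage of refinement: the hidden layer turns raw inputs into intermediate features, and the output layer turns those features into a single prediction between 0 and 1.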

The Evolution to Deep Neural Networks

Early computational systems relied on rigid rules, but breakthroughs in statistical analysis paved the way for adaptive problem-solving. Traditional machine learning emerged as a method to automate pattern recognition, using algorithms that adjusted internal parameters based on errors. This approach laid the groundwork for more sophisticated architectures capable of handling non-linear relationships.

From Traditional Machine Learning to Neural Networks

Initial machine learning models focused on improving predictions through trial and error. For example, email spam filters evolved by analysing misclassified messages and adjusting weight values. Researchers soon realised that adding intermediary processing stages – hidden layers – could capture complex input-output relationships single-layer systems missed.
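The error-driven weight adjustment described above is captured by the classic perceptron learning rule, sketched here as a stand-in (the spam filter itself is not modelled; the two-input toy datasets are assumptions for illustration). A single-layer perceptron learns the logical AND function but can never classify all four XOR cases correctly, because no single straight line separates them; this is the kind of relationship that hidden layers were introduced to capture.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron rule: weights change only when a sample is misclassified."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            prediction = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            error = target - prediction  # 0 when correct, +1 or -1 when wrong
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b


def accuracy(samples, w, b):
    correct = sum(
        (1 if w[0] * x1 + w[1] * x2 + b > 0 else 0) == target
        for (x1, x2), target in samples
    )
    return correct / len(samples)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b = train_perceptron(AND)
print("AND accuracy:", accuracy(AND, w, b))
w, b = train_perceptron(XOR)
print("XOR accuracy:", accuracy(XOR, w, b))
```

AND reaches an accuracy of 1.0 within a few epochs, while XOR stays below 1.0 however long training runs.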

The Role of Adding Multiple Hidden Layers

Stacking hidden layers enabled machines to process information hierarchically:

- First-layer nodes detect basic features like edges in images

- Subsequent layers combine these into shapes or textures

- Final layers interpret abstract concepts like objects or faces

This layered approach transformed artificial intelligence. Systems could now learn intricate patterns autonomously, outperforming earlier machine learning techniques in tasks requiring contextual understanding. The shift from shallow to deep neural architectures marked a turning point in computational capability.
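To make the first bullet concrete, here is a minimal sketch of how an edge detector responds to an image, assuming a tiny hypothetical greyscale image and a hand-crafted 2x2 kernel. In a trained convolutional network, first-layer kernels like this one are learned from data rather than designed by hand.

```python
def convolve2d(image, kernel):
    """Slide a small kernel over the image (no padding) and record its response at each position.

    As in most deep learning libraries, this is technically cross-correlation:
    the kernel is not flipped before sliding.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical 4x5 greyscale image: dark left part, bright right part.
image = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]
# Hand-crafted vertical-edge detector: responds where brightness increases left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
for row in convolve2d(image, vertical_edge):
    print(row)
```

Every output row reads `[0, 18, 0, 0]`: the filter stays silent over flat regions and responds only at the column where dark pixels meet bright ones. Deeper layers would combine many such edge maps into shapes.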

Understanding Why Deep Neural Networks Are Crucial for AI

Contemporary advancements in computational modelling rely on architectures loosely inspired by human cognitive processes. These systems excel at tasks requiring nuanced interpretation, from medical imaging analysis to real-time language translation. Their advantage stems from a layered approach to data processing, which lets machines identify patterns that simpler models miss.

The Impact of Hidden Layers on Model Accuracy

Adding hidden layers transforms how machines process information. Each layer acts as a filter, isolating specific features before passing refined data forward. For instance, facial recognition systems first detect edges, then shapes, and finally match these against known profiles. In some benchmark tests, this hierarchical structure has been reported to improve accuracy by 40-60% compared with single-layer systems, although the size of the gain depends heavily on the task and dataset.

Developers appreciate the flexibility of these architectures. Configuring a deep neural network often involves adjusting numerical parameters, such as the number of layers and the number of nodes per layer, rather than rewriting core logic. The same code that analyses handwritten digits can, with different training data and greater layer depth, be adapted to help diagnose tumours, an approach described in recent deep learning studies.

| Model Type | Layers | Accuracy (%) | Use Case |
|---|---|---|---|
| Shallow Network | 1-2 | 72 | Basic image sorting |
| Deep Network | 5+ | 94 | Medical scan analysis |
| Hybrid System | 3-4 | 85 | Customer sentiment tracking |

The table above suggests that deeper networks tend to achieve higher accuracy, although its rows come from different tasks and are not a controlled comparison. Modern frameworks automate much of the complexity, allowing engineers to focus on strategic layer configuration. As artificial intelligence tackles increasingly sophisticated challenges, these multi-layered systems remain indispensable for reliable predictions.
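The point about adjusting numerical parameters rather than rewriting core logic can be illustrated with a minimal sketch. It assumes a fully connected network with ReLU hidden layers and small random initial weights; the layer sizes and the `build_network` and `forward` helpers are hypothetical, not any particular framework's API. Changing the depth means editing one list, while the code that builds and runs the network stays the same.

```python
import math
import random


def build_network(layer_sizes, seed=0):
    """Create random weights and zero biases for a fully connected network.

    The architecture is defined entirely by `layer_sizes`, e.g. [4, 8, 2].
    """
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # Scale initial weights by 1/sqrt(n_in) so signals neither explode nor vanish quickly.
        weights = [[rng.uniform(-1, 1) / math.sqrt(n_in) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, x):
    """Run the input through every layer: ReLU on hidden layers, identity on the output layer."""
    for index, (weights, biases) in enumerate(layers):
        z = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        is_last = index == len(layers) - 1
        x = z if is_last else [max(0.0, value) for value in z]
    return x


# Same code, different depths: only the configuration list changes (sizes are hypothetical).
shallow = build_network([4, 8, 2])
deep = build_network([4, 16, 16, 16, 16, 2])
sample = [0.1, 0.5, -0.3, 0.9]
print(len(shallow), forward(shallow, sample))
print(len(deep), forward(deep, sample))
```

In real frameworks the same idea appears as a model defined from a list of layer specifications; training, not hand-setting, would then fill in the weights.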

Key Components and Architecture of Deep Neural Networks

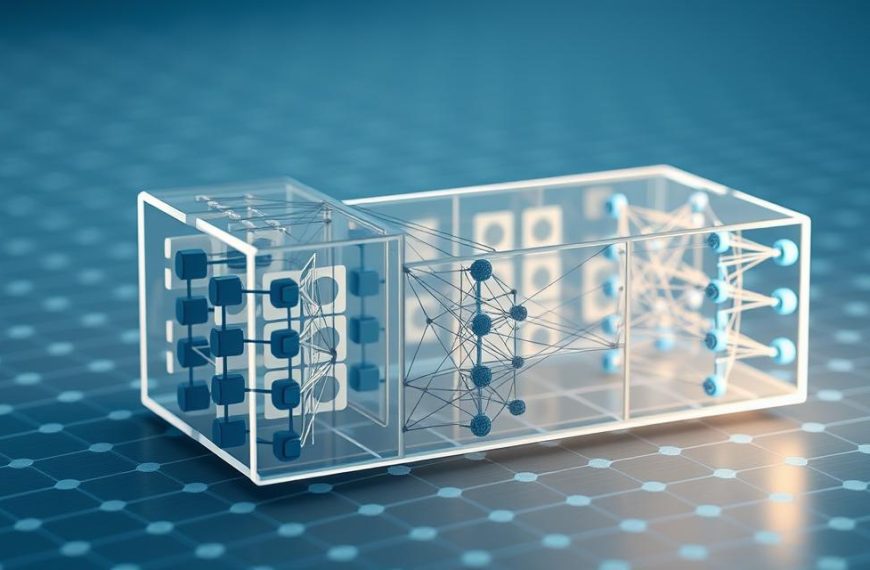

Architectural design principles determine how machines transform raw inputs into intelligent outputs. These systems employ a stacked layer approach, where each tier refines information before passing it upwards. This methodology allows models to handle tasks ranging from voice recognition to predicting stock market trends.

Stacking Layers for Enhanced Data Processing

Hierarchical structures enable progressive learning. Initial layers focus on rudimentary patterns, such as diagonal lines in handwriting analysis. Subsequent tiers combine these elements into coherent shapes, while final stages interpret complete characters. This layered processing loosely resembles how humans learn to read, starting with individual letters before advancing to words and sentences.

Consider how weights influence outcomes:

- Connections between nodes start with random values

- Training algorithms adjust these through error correction

- Large-magnitude weights (positive or negative) mark the most influential relationships
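The three bullet points above can be seen in a minimal sketch of a single linear node trained by gradient descent. It assumes a hypothetical dataset in which the target depends only on the first of two inputs: the weights start as small random values, error correction updates them, and the weight on the relevant input grows large while the other shrinks towards zero.

```python
import random

rng = random.Random(42)

# Hypothetical data: the target depends strongly on feature 0 and not at all on feature 1.
data = []
for _ in range(200):
    x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    data.append((x, 3.0 * x[0]))

# 1. Connections start with small random values.
weights = [rng.uniform(-0.1, 0.1) for _ in range(2)]
learning_rate = 0.1

# 2. Error correction: move each weight against the gradient of half the squared error.
for epoch in range(50):
    for x, target in data:
        prediction = sum(w * xi for w, xi in zip(weights, x))
        error = prediction - target
        for i in range(len(weights)):
            weights[i] -= learning_rate * error * x[i]

# 3. The large weight marks the input that actually drives the output.
print([round(w, 3) for w in weights])
```

The printed weights should be close to `[3.0, 0.0]`, so reading the magnitudes reveals which input matters. In a deep network the same signal is spread across many layers, which is why such direct reading becomes much harder.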

| Layer Type | Function | Example Output |
|---|---|---|
| Input | Receives pixel values | Raw image data |
| Hidden 1 | Detects edges | Contour outlines |
| Hidden 2 | Identifies shapes | Eyes, wheels |
| Output | Classifies objects | “Cat”, “Car” |

Practical implementations require careful planning. Engineers balance layer depth against computational costs: too few tiers limit capability, while excessive layers raise training costs and the risk of overfitting. As one researcher notes: “The art lies in designing architectures that generalise well without memorising training data.”
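The computational side of that balance can be estimated with simple arithmetic. In a fully connected layer, every output node has one weight per input plus a bias, so a layer with n_in inputs and n_out outputs holds (n_in + 1) × n_out parameters. This sketch sums that count over hypothetical configurations for 28x28 greyscale images (784 inputs) and 10 classes; since each weight costs roughly one multiply-add per prediction, the count also tracks inference cost.

```python
def parameter_count(layer_sizes):
    """Weights plus biases in a fully connected network: sum of (inputs + 1) * outputs per layer."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


# Hypothetical configurations: 784 inputs (28x28 pixels), 10 output classes.
configs = {
    "shallow": [784, 128, 10],
    "deeper": [784, 128, 128, 128, 10],
    "wide and deep": [784, 512, 512, 512, 10],
}
for name, sizes in configs.items():
    print(f"{name}: {parameter_count(sizes):,} parameters")
```

The counts come to 101,770, 134,794 and 932,362 parameters respectively: adding narrow layers is relatively cheap, while widening every layer multiplies the cost.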

Modern frameworks automate weight adjustment through backpropagation, which calculates how much each connection contributes to the overall error; an optimiser such as gradient descent then adjusts each weight to reduce that error. The result? Systems that uncover subtle correlations in datasets, often surpassing human analytical capacities.
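A minimal sketch of backpropagation, assuming a tiny hypothetical network (2 inputs, 4 sigmoid hidden nodes, 1 sigmoid output) trained on XOR with a squared-error loss and plain stochastic gradient descent. The learning rate, epoch count and seed are illustrative choices; frameworks compute the same chain-rule gradients automatically.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_hidden=4, seed=1):
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def loss_and_grads(p, x, y):
    """Forward pass, then backpropagation of the loss 0.5 * (out - y) ** 2."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    out = sigmoid(sum(w * hi for w, hi in zip(p["W2"], h)) + p["b2"])
    loss = 0.5 * (out - y) ** 2
    # Chain rule: error signal at the output, then pushed back through each hidden node.
    d_out = (out - y) * out * (1 - out)
    d_h = [d_out * w * hi * (1 - hi) for w, hi in zip(p["W2"], h)]
    grads = {
        "W1": [[dh * xi for xi in x] for dh in d_h],
        "b1": d_h,
        "W2": [d_out * hi for hi in h],
        "b2": d_out,
    }
    return loss, grads


def train(p, data, lr=0.5, epochs=5000):
    """Stochastic gradient descent: step every parameter against its gradient."""
    for _ in range(epochs):
        for x, y in data:
            _, g = loss_and_grads(p, x, y)
            for j in range(len(p["W2"])):
                p["W2"][j] -= lr * g["W2"][j]
                p["b1"][j] -= lr * g["b1"][j]
                for i in range(2):
                    p["W1"][j][i] -= lr * g["W1"][j][i]
            p["b2"] -= lr * g["b2"]
    return p


def total_loss(p, data):
    return sum(loss_and_grads(p, x, y)[0] for x, y in data)


XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
params = init_params()
before = total_loss(params, XOR)
train(params, XOR)
after = total_loss(params, XOR)
print(f"loss before: {before:.4f}, after: {after:.4f}")
```

A quick way to trust hand-written backpropagation is a gradient check: nudge one weight by a tiny amount, measure how the loss changes, and compare with the analytical gradient.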

Advantages and Challenges of Deep Neural Networks

Modern AI systems achieve remarkable feats in pattern recognition and decision-making. Their layered architectures process inputs through multiple stages, refining information into precise outputs. This capability drives breakthroughs from fraud detection to personalised medicine.

Balancing Improved Performance with the Black Box Problem

These systems outperform traditional models in accuracy, particularly with complex datasets. A credit scoring model might analyse thousands of variables to predict defaults – a task impossible for manual processing. However, this power comes with opacity.

Consider a teacher’s transparent grading system versus an unexplained AI decision. The former uses clear weightings (tests 40%, homework 20%), while layered models combine inputs through hidden calculations. As one developer notes: “We get correct answers, but can’t trace how the machine reached them.”
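The transparent side of that comparison is easy to sketch: with explicit weightings, every component's contribution to the final mark can be printed and audited. Tests (40%) and homework (20%) come from the example above; the remaining 40% is assigned to a hypothetical final exam, and the scores are invented for illustration.

```python
# Transparent grading: every component's contribution to the final mark is visible.
# Tests (40%) and homework (20%) follow the text; the final exam (40%) is hypothetical.
WEIGHTS = {"tests": 0.40, "homework": 0.20, "final_exam": 0.40}


def explain_grade(scores):
    """Return the overall mark and the exact contribution of each component."""
    contributions = {name: WEIGHTS[name] * scores[name] for name in WEIGHTS}
    return sum(contributions.values()), contributions


total, parts = explain_grade({"tests": 70, "homework": 90, "final_exam": 80})
for name, value in parts.items():
    print(f"{name:>10}: {value:5.1f}")
print(f"{'total':>10}: {total:5.1f}")
```

Here the mark of 78 decomposes exactly into 28 + 18 + 32. A deep network offers no such direct breakdown, because each input's influence is spread across many hidden weights and nonlinear activations.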

Key challenges emerge in regulated sectors:

- Medical diagnoses requiring audit trails

- Loan approvals needing justification

- Autonomous vehicles explaining collision choices

Researchers now develop models that prioritise both performance and transparency. Techniques like LIME (Local Interpretable Model-agnostic Explanations) explain individual predictions of an existing model by fitting a simple, interpretable model around each one, so the original model's accuracy is left untouched. Organisations must weigh these trade-offs when deploying AI solutions.
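The core idea behind LIME can be sketched without the `lime` package. Perturb one input, query the opaque model at the perturbed points, weight those samples by their closeness to the original, and fit a simple linear model whose coefficients serve as the local explanation. This is a simplified, LIME-inspired sketch with several assumptions: a hypothetical two-feature black-box function, Gaussian perturbations and an exponential proximity kernel. The real method also works in an interpretable feature space and can select a subset of features.

```python
import math
import random


def black_box(x1, x2):
    """Hypothetical opaque model: we can query its outputs but not read its internals."""
    return 1.0 / (1.0 + math.exp(-(3.0 * x1 - 2.0 * x2 + x1 * x2)))


def solve(a, b):
    """Solve a x = b for a small square system (Gaussian elimination with partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def local_explanation(x0, n_samples=500, scale=0.3, width=0.5, seed=0):
    """LIME-style local surrogate around x0: returns [intercept, effect_x1, effect_x2]."""
    rng = random.Random(seed)
    xtx = [[0.0] * 3 for _ in range(3)]  # weighted normal equations: (X^T W X) beta = X^T W y
    xty = [0.0] * 3
    for _ in range(n_samples):
        d = [rng.gauss(0, scale), rng.gauss(0, scale)]            # perturb the input
        y = black_box(x0[0] + d[0], x0[1] + d[1])                 # query the opaque model
        weight = math.exp(-(d[0] ** 2 + d[1] ** 2) / width ** 2)  # nearby samples count more
        features = [1.0, d[0], d[1]]
        for i in range(3):
            xty[i] += weight * features[i] * y
            for j in range(3):
                xtx[i][j] += weight * features[i] * features[j]
    return solve(xtx, xty)


intercept, effect_x1, effect_x2 = local_explanation([0.2, 0.1])
print(f"local effect of x1: {effect_x1:+.3f}, local effect of x2: {effect_x2:+.3f}")
```

For this black box, the surrogate reports a positive local effect for x1 and a negative one for x2, matching the signs of the function's true slope near that point.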

Applications and Real World Examples in Modern AI

From unlocking smartphones to diagnosing illnesses, intelligent systems now permeate daily life. These technologies rely on advanced computational methods to interpret visual data and human language with remarkable precision.

Image Recognition and Natural Language Processing

Convolutional neural networks excel at analysing visual information. They power facial recognition in security systems and tumour detection in medical scans. A recent NHS trial achieved 92% accuracy in identifying early-stage cancers through image classification techniques.

For language tasks, systems process text and speech through layered architectures. Recurrent neural networks, which read text one token at a time while carrying a memory of earlier tokens, helped make real-time translation between 100+ languages practical. Meanwhile, transformer models drive chatbots that understand context, for example by resolving customer queries without human intervention.
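How a recurrent network carries memory across a sequence can be shown with a minimal sketch of a simple (Elman-style) recurrent step. It assumes a hypothetical 2-unit hidden state, hand-picked weights and three one-hot token vectors; production translation systems use far larger, learned weights and more elaborate cells or transformer layers.

```python
import math


def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One recurrent step: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    return [
        math.tanh(
            sum(w * x for w, x in zip(W_x[i], x_t))
            + sum(w * h for w, h in zip(W_h[i], h_prev))
            + b[i]
        )
        for i in range(len(b))
    ]


# Hypothetical 2-unit hidden state and 3-dimensional token vectors.
W_x = [[0.5, -0.3, 0.8], [0.1, 0.4, -0.6]]  # input-to-hidden weights
W_h = [[0.6, 0.0], [-0.2, 0.7]]             # hidden-to-hidden weights (the "memory" path)
b = [0.0, 0.1]

sentence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # three one-hot "tokens"
h = [0.0, 0.0]
for token in sentence:
    h = rnn_step(token, h, W_x, W_h, b)  # the same weights are reused at every position
    print([round(v, 3) for v in h])
```

Because each new state depends on the previous one, the final state summarises the whole sequence in order; feeding the same tokens in reverse would produce a different final state.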

Case Studies Demonstrating Practical Implementation

London-based fintech firms use these technologies to combat fraud. One company reduced false positives by 40% using voice biometrics (speaker recognition) to verify customer identities during calls. Its system analyses vocal patterns alongside transaction histories.

In transportation, computer vision guides autonomous vehicles. Sensors process live footage to recognise pedestrians, traffic signs, and road markings. Trials in Milton Keynes demonstrate 99.7% object detection accuracy under various weather conditions.

| Industry | Application | Impact |
|---|---|---|
| Healthcare | X-ray analysis | 30% faster diagnoses |
| Retail | Chatbot support | 60% query resolution |
| Manufacturing | Quality control | 85% defect detection |

These examples illustrate how layered architectures solve real-world challenges. As one AI engineer observes: “We’re moving from theoretical models to tools that directly improve people’s lives.”

Future Trends in Machine Learning and Deep Neural Networks

Innovation continues to reshape computational approaches, with transformer models leading recent breakthroughs. Originally designed for language tasks, these architectures now excel in visual analysis through vision transformers. Their attention mechanisms identify relationships between image patches, achieving 98% accuracy in industrial defect detection trials – outperforming traditional convolutional methods.
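The attention mechanism at the heart of vision transformers is scaled dot-product attention: each patch's query is compared with every patch's key, the scores are normalised with a softmax, and the resulting weights mix the patches' values. This minimal sketch assumes four hypothetical 2-D patch embeddings and uses the patches directly as queries, keys and values; a real vision transformer first applies learned linear projections and uses many heads and layers.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query mixes the values, weighted by query-key similarity."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical embeddings for four image patches (2-D for readability).
patches = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
# Self-attention with identity projections: queries, keys and values are the patches themselves.
outputs, weights = attention(patches, patches, patches)
for row in weights:
    print([round(w, 2) for w in row])
```

Patches with similar embeddings (the first two, and the last two) attend more strongly to each other than to the rest, which is how attention surfaces relationships between regions of an image.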

Neural architecture search (NAS) automates model design, reducing development time by 70% in recent implementations. This technique evaluates thousands of configurations to identify optimal structures for specific datasets. “NAS democratises AI development,” notes Dr Eleanor Hart from Cambridge’s AI Lab. “It lets smaller teams compete with tech giants in model efficiency.”
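In its simplest form, NAS is random search: sample candidate architectures, score each one and keep the best. This minimal sketch assumes a search space of depth and width only, and it replaces the expensive step (training each candidate and measuring validation accuracy) with a hypothetical `proxy_score` formula, so it shows the search loop rather than realistic results. Published NAS methods use smarter strategies such as reinforcement learning or evolutionary search, plus tricks such as weight sharing to cut evaluation cost.

```python
import random


def proxy_score(depth, width):
    """Hypothetical stand-in for 'train the candidate and measure validation accuracy'.

    This toy formula rewards capacity but penalises size, so the search has a
    non-trivial optimum; it is not a model of real accuracy.
    """
    capacity = 1.0 - 1.0 / (1 + depth * width / 64)
    cost = 0.0005 * depth * width
    return capacity - cost


def random_search(n_trials=1000, seed=0):
    """Sample architectures at random and keep the best-scoring one."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        candidate = {"depth": rng.randint(1, 12), "width": rng.choice([16, 32, 64, 128, 256])}
        score = proxy_score(**candidate)
        if best is None or score > best[0]:
            best = (score, candidate)
    return best


score, architecture = random_search()
print(architecture, round(score, 3))
```

With only 60 possible candidates and 1,000 trials, the search almost surely finds the best one; in real NAS the space is vast and each evaluation costs a training run, which is why smarter strategies matter.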

| Technique | Application | Efficiency Gain |
|---|---|---|
| Vision Transformers | Medical Imaging | 40% faster processing |
| Quantum ML | Drug Discovery | 300x speed increase |
| Federated Learning | Banking Security | 60% less data transfer |

Edge computing demands drive the development of compact models. Mobile-optimised architectures now deliver 90% of cloud-based performance using a tenth of the power. Simultaneously, quantum-enhanced systems show promise in simulating molecular structures, potentially revolutionising materials science.
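One widely used way to shrink models for edge devices is 8-bit quantisation. The text above does not name it, so treat it as an illustrative choice. The idea is to store each weight as a small integer plus a shared scale factor instead of a 32-bit float. This minimal sketch of symmetric post-training quantisation uses hypothetical weight values and does not reproduce the performance or power figures quoted above.

```python
def quantise_int8(weights):
    """Symmetric post-training quantisation: map floats onto integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 if all weights are zero
    return [round(w / scale) for w in weights], scale


def dequantise(q_weights, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in q_weights]


weights = [0.82, -0.31, 0.05, -1.27, 0.64]  # hypothetical layer weights (float32 in practice)
q, scale = quantise_int8(weights)
restored = dequantise(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)  # small integers that fit in one byte each
print(f"scale={scale:.4f}, max error={max_error:.4f}")
```

Each weight now needs one byte instead of four, and the rounding error per weight is at most half the scale factor.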

Privacy-conscious approaches like federated learning enable collaborative training without sharing sensitive data. Hospitals across the NHS trialled this method, improving diagnostic models while maintaining patient confidentiality. As these technologies mature, they’ll redefine how organisations deploy intelligent systems.
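A minimal sketch of federated averaging (FedAvg), one standard federated learning algorithm. It assumes three hypothetical sites whose private data follow the same simple relationship y = 2x, plus a one-parameter linear model. Each site trains on its own data; only the model weight travels to the server, which averages the local results weighted by dataset size.

```python
import random


def local_training(w, data, lr=0.05, epochs=20):
    """Each site fits y ≈ w * x on its own data; the raw data never leaves the site."""
    for _ in range(epochs):
        for x, y in data:
            w -= lr * (w * x - y) * x
    return w


def federated_average(global_w, sites, rounds=10):
    """FedAvg: sites train locally, then the server averages their weights by dataset size."""
    for _ in range(rounds):
        updates = [(local_training(global_w, data), len(data)) for data in sites]
        total = sum(n for _, n in updates)
        global_w = sum(w * n for w, n in updates) / total
    return global_w


rng = random.Random(0)


def make_site(n):
    """Hypothetical private dataset for one site (e.g. a hospital): y = 2x plus small noise."""
    xs = [rng.uniform(0, 1) for _ in range(n)]
    return [(x, 2.0 * x + rng.gauss(0, 0.1)) for x in xs]


sites = [make_site(n) for n in (50, 120, 80)]
w = federated_average(global_w=0.0, sites=sites)
print(round(w, 3))  # should be close to 2.0, learned without pooling the raw data
```

The server never sees a single data point, only three numbers per round, which is the property that lets institutions collaborate while keeping records local.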

Conclusion

Artificial intelligence systems have revolutionised data processing through deep neural networks. These layered models excel at identifying complex patterns, enabling accurate predictions across industries. Their ability to learn from vast datasets makes them indispensable in fields ranging from medical diagnostics to financial forecasting.

Challenges persist, particularly regarding computational costs and decision transparency. Innovations in training methods aim to balance performance with explainability, crucial for sensitive applications. As one researcher notes: “The next breakthrough lies in making these systems both powerful and interpretable.”

Future advancements will likely focus on efficiency and real-world integration. With continuous improvements, such architectures will drive smarter solutions while addressing current limitations. Their role in shaping intelligent systems remains unmatched, solidifying their place at the core of modern computing.