At the heart of contemporary machine learning architectures lies a simple yet transformative concept: dimensional transformation. Linear layers, the components that perform it in frameworks like PyTorch, enable systems to process and reinterpret data through mathematical operations. Though rooted in classical statistical methods, their adaptation within computational models unlocks capabilities far beyond basic regression tasks.

When examining structures like PyTorch’s nn.Linear, one discovers their dual role. With a single output feature, they mirror traditional regression techniques. However, multiple outputs allow data to be projected into richer spaces, revealing hidden patterns. This flexibility makes them indispensable for tasks ranging from basic predictions to abstract feature extraction.

Their power stems from adjustable parameters—weight matrices and bias vectors—that evolve during training. By stacking these components, practitioners create hierarchical representations. Each step refines the input, gradually building complexity while maintaining computational efficiency.

Mastery of these elements remains critical for designing effective models. Whether handling tabular data or high-dimensional inputs, their role in shaping a system’s learning capacity cannot be overstated. Understanding their mechanics provides the groundwork for advancing into more sophisticated architectures.

Introducing Linear Layers in Neural Networks

Modern computational systems rely on mathematical engines to reshape information flows. These components, central to frameworks like PyTorch, execute operations defined by y = xAᵀ + b. Here, the input values (x) are multiplied by the transposed weight matrix (A) and shifted by the bias vector (b), producing transformed outputs.
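To make the formula concrete, the following minimal sketch checks that the output of nn.Linear agrees with x @ A.T + b computed by hand. The sizes (4 samples, 10 inputs, 5 outputs), the seed, and the variable names are illustrative assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)  # arbitrary seed, only for reproducibility

layer = nn.Linear(in_features=10, out_features=5)
x = torch.randn(4, 10)  # batch of 4 samples with 10 features each

# Manual version of y = x A^T + b, using the layer's own parameters.
manual = x @ layer.weight.T + layer.bias
builtin = layer(x)

# The two results agree up to floating-point rounding.
results_agree = torch.allclose(manual, builtin, atol=1e-5)
print(builtin.shape, results_agree)
```

The comparison uses a small tolerance rather than exact equality because the two code paths may round intermediate results differently.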

Definition and Significance

At their core, these elements act as bridges between network segments. By setting in_features and out_features, developers control the change in dimension, for example turning 10 inputs into 5 outputs. Parameters are initialised randomly and then refined over training cycles.

Their simplicity masks profound utility. As one researcher notes:

“Without these transformations, systems couldn’t distil patterns from raw numbers.”

This capability underpins tasks from speech recognition to financial forecasting.

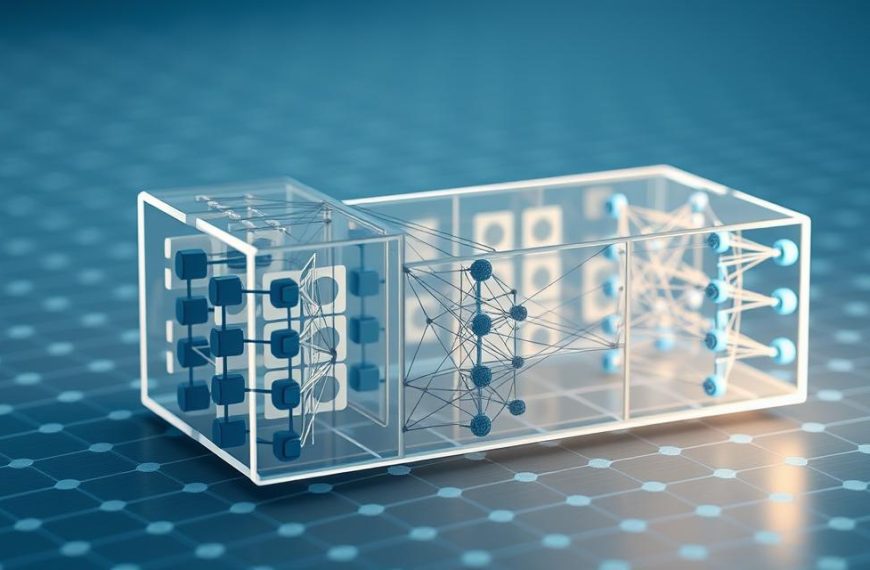

Role in Deep Learning Architectures

Stacking multiple units creates hierarchical processing. Early stages might handle basic feature detection, while subsequent ones build abstract relationships. Despite their straightforward design, these components form the scaffolding for complex models.

PyTorch’s nn.Linear exemplifies this principle. It automatically initialises weight matrices and bias vectors, streamlining development. Such efficiency allows practitioners to focus on architectural creativity rather than manual calculations.

Exploring the Mechanics of a Linear Layer

Central to data transformation within computational models lies a dynamic interplay between structured parameters and algebraic operations. This section unpacks the components enabling systems to reinterpret features through learned relationships.

Understanding the Weight Matrix and Bias Vector

The weight matrix dictates how input elements influence outputs. Sized as out_features × in_features, its values evolve during training to capture patterns. For example, a layer converting 8 inputs to 5 outputs uses a 5×8 matrix.

Bias vectors add flexibility by shifting results. Each output feature receives an adjustable offset, allowing models to fit data beyond rigid origin constraints. Together, these parameters enable precise control over transformation outcomes.
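As a small illustration of the shapes described above, this sketch inspects the parameters of an 8-to-5 layer and shows the bias acting as an offset. The variable names are assumptions for the example.

```python
import torch
from torch import nn

layer = nn.Linear(in_features=8, out_features=5)

# Weight is stored as (out_features, in_features); bias has one entry per output.
weight_shape = tuple(layer.weight.shape)  # (5, 8)
bias_shape = tuple(layer.bias.shape)      # (5,)

# Total trainable parameters: 5 * 8 weights + 5 biases = 45.
n_params = sum(p.numel() for p in layer.parameters())

# With an all-zero input the weights contribute nothing,
# so the output is just the bias: the layer is not forced through the origin.
zero_output = layer(torch.zeros(1, 8))
bias_is_offset = torch.allclose(zero_output[0], layer.bias)

print(weight_shape, bias_shape, n_params, bias_is_offset)
```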

The Process Behind Matrix Multiplication

Core computations multiply a batch of input vectors by the transposed weight matrix. A 3×2 input (3 samples, 2 features) multiplied by a 2×4 matrix yields a 3×4 output. This step changes the feature dimension while leaving the batch dimension intact, so a whole batch is processed in a single operation.

| Input Shape (batch × in_features) | Transposed Weight (in_features × out_features) | Output Shape (batch × out_features) |
|---|---|---|
| 3×2 | 2×4 | 3×4 |
| 5×10 | 10×7 | 5×7 |
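The rows of the table can be reproduced with plain tensor multiplication. This is a minimal sketch with random values; only the shapes matter, and the names are illustrative.

```python
import torch

# (input shape, transposed-weight shape) pairs taken from the table above.
cases = [((3, 2), (2, 4)), ((5, 10), (10, 7))]

output_shapes = []
for input_shape, weight_shape in cases:
    x = torch.randn(*input_shape)
    w_t = torch.randn(*weight_shape)
    # The inner dimensions must match; the outer ones give the result shape.
    output_shapes.append(tuple((x @ w_t).shape))

print(output_shapes)  # [(3, 4), (5, 7)]
```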

Initialisation strategies, like Xavier or He methods, impact training stability. Properly scaled random starts prevent gradient explosions or vanishing updates. Memory usage grows with matrix sizes, requiring optimisation for large-scale models.
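The snippet below is a hedged sketch of how the Xavier and He schemes mentioned above can be applied with torch.nn.init. The layer sizes are arbitrary assumptions, and pairing Xavier with tanh/sigmoid and He with ReLU is a common convention rather than a rule.

```python
import torch
from torch import nn

# Xavier (Glorot) uniform: commonly paired with tanh or sigmoid activations.
xavier_layer = nn.Linear(256, 128)
nn.init.xavier_uniform_(xavier_layer.weight)
nn.init.zeros_(xavier_layer.bias)

# He (Kaiming) uniform: commonly paired with ReLU activations.
he_layer = nn.Linear(256, 128)
nn.init.kaiming_uniform_(he_layer.weight, nonlinearity="relu")
nn.init.zeros_(he_layer.bias)

# Both schemes sample from a uniform range scaled by the layer size.
# Xavier bound: sqrt(6 / (fan_in + fan_out)) = sqrt(6 / 384) = 0.125
# He bound:     sqrt(6 / fan_in)             = sqrt(6 / 256) ~ 0.153
xavier_max = xavier_layer.weight.abs().max().item()
he_max = he_layer.weight.abs().max().item()
print(xavier_max, he_max)
```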

Implementing a Linear Layer using PyTorch

Practical implementation bridges theoretical concepts with real-world applications. Crafting custom components in PyTorch offers insights into how frameworks handle dimensional transformations under the hood. This hands-on approach demystifies parameter management and performance optimisation.

Building a Custom Linear Layer

Creating a bespoke Linear class starts by inheriting from nn.Module. Weights and biases are initialised as nn.Parameter tensors, enabling automatic gradient tracking. For a layer processing 5 inputs into 3 outputs:

```python
import torch
from torch import nn


class Linear(nn.Module):
    def __init__(self, dim_in, dim_out):
        super().__init__()
        # Stored as (dim_in, dim_out), the transpose of nn.Linear's layout.
        self.weights = nn.Parameter(torch.rand(dim_in, dim_out))
        self.bias = nn.Parameter(torch.rand(dim_out))

    def forward(self, X):
        # (batch, dim_in) @ (dim_in, dim_out) + (dim_out,) -> (batch, dim_out)
        return X @ self.weights + self.bias
```

The forward pass uses matrix multiplication, transforming input dimensions efficiently. Random initialisation provides a starting point for training adjustments.

Synchronising with nn.Linear for Accuracy

PyTorch’s native implementation stores its weight matrix as (out_features, in_features), the transpose of the custom layout. Matching outputs requires transposing the official layer’s weights before copying:

```python
with torch.no_grad():
    # nn.Linear stores weight as (out_features, in_features), so transpose it.
    custom_layer.weights.copy_(official_layer.weight.T)
    custom_layer.bias.copy_(official_layer.bias)
```

Benchmark tests reveal custom code executes in 21.4 µs versus PyTorch’s 23 µs. The difference is marginal, and the built-in layer is backed by PyTorch’s optimised F.linear routine, so it remains the safer default.

Explaining What a Linear Layer Is in a Neural Network

Building computational systems often involves balancing custom solutions with established tools. This becomes evident when examining how frameworks like PyTorch handle dimensional transformations compared to manual implementations. Understanding these differences clarifies when to prioritise flexibility over convenience.

Functional Parity Through Weight Alignment

Both approaches produce the same outputs when parameters match. A check such as torch.allclose(l1(X_train[:5]), l2(X_train[:5])) confirms this equivalence, and it is more robust than an exact == comparison because floating-point results can differ in the last few bits. The key distinction lies in weight orientation: custom code uses (input_size, output_size), while PyTorch stores the transposed matrix, (output_size, input_size).
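The parity check can be sketched end to end as follows. The custom class is repeated here only so the example runs on its own; the 5-to-3 sizes and the random input X stand in for real training data and are assumptions.

```python
import torch
from torch import nn


class Linear(nn.Module):
    """Custom layer from the text, with weights stored as (dim_in, dim_out)."""

    def __init__(self, dim_in, dim_out):
        super().__init__()
        self.weights = nn.Parameter(torch.rand(dim_in, dim_out))
        self.bias = nn.Parameter(torch.rand(dim_out))

    def forward(self, X):
        return X @ self.weights + self.bias


custom_layer = Linear(5, 3)
official_layer = nn.Linear(5, 3)

# Copy the official parameters into the custom layer, transposing the weight.
with torch.no_grad():
    custom_layer.weights.copy_(official_layer.weight.T)
    custom_layer.bias.copy_(official_layer.bias)

X = torch.randn(16, 5)  # placeholder batch, not real data
outputs_match = torch.allclose(custom_layer(X), official_layer(X), atol=1e-5)
print(outputs_match)
```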

Performance tests reveal only a marginal speed advantage for custom code (21.4 µs vs 23 µs), small enough that it should not drive the decision. Both versions support autograd and can run on a GPU; the built-in layer adds a well-tested, optimised implementation. Developers must weigh these factors against the educational value of manual implementation.

Practical applications often favour PyTorch’s approach for maintainability, while custom builds serve prototyping or specialised scenarios.

Ultimately, the choice depends on project requirements. Both methods demonstrate how fundamental transformations drive learning in modern models. This flexibility enables practitioners to adapt components for specific data processing needs.

Optimising and Troubleshooting Linear Layers

Efficient model development requires mastering both precision and performance tuning. Even well-designed architectures face operational challenges that demand systematic debugging strategies.

Common Errors and Practical Solutions

Dimensional mismatches frequently disrupt workflows. A layer expecting 256 input features will fail if fed 128-dimensional data. PyTorch raises a RuntimeError that reports both shapes, which usually points directly to the fix: reshape the tensor or correct the layer’s in_features.
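The failure described above is easy to reproduce. This sketch triggers the error on purpose and then shows one possible guard; the batch size of 32 and the 10 outputs are assumptions, and the exact wording of the error message may vary between PyTorch versions.

```python
import torch
from torch import nn

layer = nn.Linear(in_features=256, out_features=10)
bad_input = torch.randn(32, 128)   # 128 features, but the layer expects 256

try:
    layer(bad_input)
    error_message = None
except RuntimeError as err:
    # The message names the two shapes that could not be multiplied.
    error_message = str(err)

print(error_message)

# A cheap guard before the forward pass makes the cause explicit.
good_input = torch.randn(32, 256)
assert good_input.shape[-1] == layer.in_features
output_shape = tuple(layer(good_input).shape)  # (32, 10)
```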

Layer sizing proves equally critical. A 512×1024 weight matrix holds 524,288 parameters, which wastes memory and processing power if the task only needs 256 features. For the values themselves, the torch.nn.init module offers structured approaches, like Xavier uniform for balanced parameter scaling.

Performance and Efficiency Considerations

Memory consumption escalates with layer count and feature dimensions. A 4096×8192 weight matrix consumes 134MB at 32-bit precision – impractical for edge devices. Techniques like quantisation or reduced precision training mitigate this.
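The 134MB figure follows from simple arithmetic, sketched below. The helper weight_memory_mb is a hypothetical name introduced for this example, and megabytes are taken as 10⁶ bytes.

```python
def weight_memory_mb(rows: int, cols: int, bytes_per_value: int) -> float:
    """Size of a dense rows x cols matrix in megabytes (1 MB = 10**6 bytes)."""
    return rows * cols * bytes_per_value / 1e6


# 4096 * 8192 = 33,554,432 values.
fp32_mb = weight_memory_mb(4096, 8192, 4)  # 32-bit floats, 4 bytes each
fp16_mb = weight_memory_mb(4096, 8192, 2)  # half precision, 2 bytes each
int8_mb = weight_memory_mb(4096, 8192, 1)  # 8-bit quantised, 1 byte each

print(f"fp32: {fp32_mb:.1f} MB, fp16: {fp16_mb:.1f} MB, int8: {int8_mb:.1f} MB")
```

Halving the precision halves the footprint of the weights, which is the saving that reduced-precision and quantised storage aim for. Gradients and optimiser state add further memory during training.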

Proper initialisation accelerates convergence. Biases set to zero avoid early saturation, while scaled weights maintain stable gradient flow. When a linear layer follows convolutional layers, a flatten() operation reshapes the 4D convolutional output (batch, channels, height, width) into the 2D tensor (batch, features) that the linear layer expects.
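A minimal sketch of the convolution-to-linear hand-off is shown below. The image size (16×16 RGB), channel count, and 10 output classes are assumptions chosen to keep the arithmetic easy to follow.

```python
import torch
from torch import nn

model = nn.Sequential(
    # padding=1 with a 3x3 kernel keeps the 16x16 spatial size.
    nn.Conv2d(in_channels=3, out_channels=8, kernel_size=3, padding=1),
    nn.ReLU(),
    # Flatten keeps the batch dimension: (batch, 8, 16, 16) -> (batch, 2048).
    nn.Flatten(),
    nn.Linear(8 * 16 * 16, 10),
)

images = torch.randn(4, 3, 16, 16)  # batch of 4 random "images"
logits = model(images)
print(logits.shape)  # torch.Size([4, 10])
```

The in_features of the linear layer must equal channels × height × width of the convolutional output, which is a common source of the shape errors discussed earlier.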

Extending Applications in Deep Learning Models

Foundational components in machine learning frameworks enable systems to evolve from basic pattern recognition to sophisticated decision-making engines. Their transformative potential becomes evident when examining real-world implementations across architectures.

Utilising Linear Layers in Regression and MLP Models

Multi-layer perceptrons showcase these components’ adaptability. A typical structure combines an input stage, hidden processing units, and final outputs. Each connection employs weighted summations, mathematically expressed as w₁x₁ + w₂x₂ + … + wₘxₘ.

Regression tasks demonstrate simpler implementations. Single-output configurations mirror traditional statistical approaches, while multi-output systems handle complex scenarios like housing price predictions with multiple dependent variables.
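The single-output case can be sketched as an ordinary least-squares fit. The synthetic data (y = 2x + 1), the learning rate, and the step count are assumptions chosen so that plain gradient descent converges quickly.

```python
import torch
from torch import nn

torch.manual_seed(0)  # arbitrary seed for reproducibility

# Synthetic data on a known line, so the result is easy to check.
x = torch.linspace(-1, 1, 50).unsqueeze(1)  # shape (50, 1)
y = 2 * x + 1

model = nn.Linear(in_features=1, out_features=1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.MSELoss()

for _ in range(500):
    optimizer.zero_grad()
    loss = loss_fn(model(x), y)
    loss.backward()
    optimizer.step()

slope = model.weight.item()
intercept = model.bias.item()
print(f"slope={slope:.3f}, intercept={intercept:.3f}")  # close to 2 and 1
```

A multi-output regression uses the same code with out_features set to the number of target variables and y given one column per target.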

Stacking Layers for Complex Feature Transformations

Deep architectures leverage sequential processing for hierarchical learning. Initial stages might identify edges in images, while subsequent ones assemble these into recognisable shapes. This layered approach enables models to distil abstract concepts from raw inputs.

Integration with non-linear functions breaks linear limitations. ReLU or sigmoid operations between processing stages introduce necessary complexity for modelling real-world phenomena.
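The sketch below illustrates why the activation matters: two stacked linear layers with nothing in between collapse into a single linear map, while inserting ReLU changes the result. Layer sizes and the seed are illustrative assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)  # arbitrary seed for reproducibility

first = nn.Linear(4, 8)
second = nn.Linear(8, 3)
x = torch.randn(5, 4)

# Without an activation:
# (x W1^T + b1) W2^T + b2 = x (W2 W1)^T + (W2 b1 + b2)
combined_weight = second.weight @ first.weight             # shape (3, 4)
combined_bias = second.weight @ first.bias + second.bias   # shape (3,)

stacked = second(first(x))
collapsed = x @ combined_weight.T + combined_bias
stack_is_linear = torch.allclose(stacked, collapsed, atol=1e-5)

# With ReLU between the layers, the output no longer matches the linear stack.
mlp = nn.Sequential(first, nn.ReLU(), second)
relu_changes_output = not torch.allclose(mlp(x), stacked, atol=1e-5)

print(stack_is_linear, relu_changes_output)
```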

| Hidden Layers | Neurons per Layer | Training Time Complexity |
|---|---|---|
| 3 | 128 | O(n·m·h) |
| 5 | 512 | O(n·h²) |

Modern frameworks like PyTorch optimise these computations through parallel processing. However, developers must balance depth against resource constraints – deeper networks demand more memory and processing power.
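To give a rough sense of how depth and width drive resource use, this sketch counts trainable parameters for the two configurations in the table. The helpers build_mlp and count_parameters are hypothetical names, and the 32 input features and single output are assumptions not given in the text.

```python
from torch import nn


def build_mlp(in_features, hidden_size, n_hidden, out_features):
    """Stack n_hidden Linear+ReLU blocks followed by an output layer."""
    layers = []
    width = in_features
    for _ in range(n_hidden):
        layers += [nn.Linear(width, hidden_size), nn.ReLU()]
        width = hidden_size
    layers.append(nn.Linear(width, out_features))
    return nn.Sequential(*layers)


def count_parameters(model):
    """Total number of trainable weights and biases."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


small = build_mlp(in_features=32, hidden_size=128, n_hidden=3, out_features=1)
large = build_mlp(in_features=32, hidden_size=512, n_hidden=5, out_features=1)

small_params = count_parameters(small)  # 37,377
large_params = count_parameters(large)  # 1,068,033
print(small_params, large_params)
```

Under these assumptions the deeper, wider network has roughly 29 times as many parameters, most of them in the hidden-to-hidden matrices, whose size grows with the square of the layer width.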

Conclusion

Dimensional transformations form the backbone of modern machine intelligence. Through weight matrices and bias vectors, these components reshape input-output relationships, enabling systems to distil patterns from raw numbers. Their adaptability spans simple regression tasks to multi-layered architectures, proving indispensable across PyTorch’s ecosystem.

Mastering parameter adjustments, such as tuning matrix sizes or initialisation strategies, remains vital for efficient model design. Whether deploying pre-built nn.Linear layers or crafting custom solutions, practitioners balance computational efficiency with expressive power. This skill set underpins more advanced architectures, from multi-layer perceptrons to LSTMs.

As foundational elements, they bridge statistical methods and deep learning innovations. Proper integration with activation functions and training data ensures robust feature extraction. For developers, troubleshooting dimensional mismatches or memory constraints becomes intuitive with practice.

Ultimately, these transformations exemplify how simplicity fuels complexity in artificial systems. Their mathematical elegance continues to drive breakthroughs, solidifying their role as essential tools in modern computational toolkits.