For decades, neural networks remained a niche concept in computer science. After initial promise in the late 20th century, progress plateaued as researchers favoured more predictable methods. By 2008, many considered these systems relics of a bygone era – until three pioneers reignited the spark.

Geoffrey Hinton stubbornly championed neural architectures despite widespread doubt. Simultaneously, Jensen Huang foresaw GPUs revolutionising computational capabilities beyond graphics processing. Meanwhile, Fei-Fei Li’s ImageNet project – mocked for its unprecedented scale – became the proving ground for modern machine learning.

This unlikely trifecta catalysed a paradigm shift. Enhanced processing power met vast datasets and refined algorithms, creating fertile ground for innovation. The combination transformed artificial intelligence from theoretical exercise to practical tool.

Today’s applications span healthcare diagnostics to autonomous vehicles, reshaping industries globally. This section explores how years of incremental progress coalesced into a technological watershed moment. We’ll analyse the critical factors enabling neural networks’ dominance in contemporary AI systems.

The journey from academic curiosity to world-changing technology reveals much about modern innovation. Let’s unpack the convergence of ideas that made this transformation possible.

Introduction to the Rapid Rise of Neural Networks

The autumn of 2008 marked a turning point for artificial intelligence research. Computer science departments globally, including Princeton’s graduate programme, had largely abandoned neural architectures in favour of support vector machines. These mathematically elegant models seemed more reliable at the time – until a quiet revolution began.

Three critical developments reshaped the landscape between 2008 and 2012. First, hardware advancements enabled processing of vast datasets that earlier systems couldn’t handle. Second, researchers began prioritising practical results over theoretical purity. Finally, collaborative efforts bridged previously isolated academic disciplines.

Traditional machine learning methods excelled at structured problems but faltered with real-world complexity. Handcrafted features and rigid algorithms struggled with nuances like image variations or speech patterns. Neural architectures, once deemed impractical, demonstrated superior adaptability through layered network structures.

The shift wasn’t immediate. Early sceptics questioned whether these systems could scale effectively. However, benchmark results from competitions like ImageNet silenced doubters, showing that neural approaches could decisively beat established methods and, within a few years, rival human accuracy on specific tasks. This validation triggered a domino effect across research communities.

By 2012, neural networks had become the cornerstone of modern AI development. Their resurgence illustrates how technological progress often depends on convergence – when theoretical potential finally meets enabling infrastructure. This alignment transformed machine learning from academic exercise to industrial powerhouse.

Historical Perspectives and Early Developments

Warren McCulloch’s 1943 declaration – “Nervous activity is essentially binary” – sparked humanity’s first serious attempt to replicate biological cognition. Alongside Walter Pitts, he designed a computational model using electrical circuits to mimic neural interactions. This foundational work laid the blueprint for modern systems, though its implications wouldn’t be fully realised for decades.

Pioneering Research in Artificial Intelligence

The late 1950s saw Frank Rosenblatt’s perceptron generate considerable excitement. This single-layer system could recognise simple patterns, convincing many that machines might soon match human perception. Researchers marvelled at its ability to adjust connections between artificial neurons through trial and error.

Early success proved deceptive. While these networks excelled at linear tasks, real-world problems demanded non-linear solutions. Basic computations involving visual recognition or language translation remained frustratingly out of reach. Systems frequently misinterpreted rotated objects or accented speech.
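The gap between linear and non-linear tasks can be made concrete with a small sketch. The code below is an illustrative perceptron-style learning rule, not Rosenblatt's original implementation; the function names, learning rate and epoch count are assumptions chosen for the example. It learns the linearly separable AND function perfectly, but can never classify all four XOR cases, because no single straight line separates them.

```python
# Illustrative single-layer perceptron (hypothetical helper names).

def predict(weights, bias, x):
    """Threshold unit: fire (1) when the weighted sum is positive."""
    total = weights[0] * x[0] + weights[1] * x[1] + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Adjust weights by trial and error using the perceptron rule."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
    return weights, bias


def accuracy(samples, weights, bias):
    hits = sum(predict(weights, bias, x) == target for x, target in samples)
    return hits / len(samples)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_acc = accuracy(AND, *train_perceptron(AND))
xor_acc = accuracy(XOR, *train_perceptron(XOR))
print("AND accuracy:", and_acc)  # linearly separable, so training converges
print("XOR accuracy:", xor_acc)  # a single linear unit tops out at 0.75
```

Adding a hidden layer removes this ceiling, which is why multi-layer architectures became the focus of later work.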

Early Limitations and Setbacks

Multi-layer architectures emerged as a potential solution during the 1960s. However, training deeper structures required mathematical techniques that hadn’t yet been developed. Marvin Minsky and Seymour Papert’s influential 1969 critique, Perceptrons, highlighted these shortcomings, triggering reduced funding and interest.

Two persistent issues plagued researchers:

- Vanishing gradients during weight adjustments

- Insufficient processing power for iterative training

These challenges led to the first AI winter, a period where progress stalled for nearly 15 years. Yet a dedicated cadre continued refining theories, convinced that biological principles held the key to machine intelligence. Their perseverance would eventually bridge the gap between theoretical models and practical implementation.
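The first issue listed above can be illustrated numerically. The sketch below assumes sigmoid activations and, for simplicity, a weight of 1.0 at every layer; both are assumptions made for the example rather than details from any specific historical system. Because the sigmoid's derivative never exceeds 0.25, the error signal shrinks geometrically as it passes back through more layers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    """Derivative of the sigmoid; its maximum value is 0.25 at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


def gradient_scale(depth, z=0.0, weight=1.0):
    """Product of per-layer factors an error signal picks up on its way back."""
    scale = 1.0
    for _ in range(depth):
        scale *= weight * sigmoid_grad(z)
    return scale


for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))
```

Even in this best case for the sigmoid, ten layers reduce the signal to below one millionth of its original size, so early layers barely learn.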

Key Innovations in Neural Network Architecture

The mid-1980s witnessed a mathematical renaissance that redefined machine learning capabilities. Geoffrey Hinton’s collaborative work with David Rumelhart and Ronald Williams popularised a systematic approach to training multi-layered systems through backpropagation. This method enabled precise adjustment of connection weights across successive layers.

Evolution of Backpropagation Techniques

Backpropagation revolutionised how systems learn from examples. The algorithm calculates errors at the output layer, then propagates adjustments backward using calculus’ chain rule. Each iteration makes minor tweaks to weights, gradually improving accuracy through repeated exposure to data.
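A minimal sketch of that procedure follows, assuming the smallest possible network: one input, one sigmoid hidden unit, one linear output and a squared-error loss. The starting weights, learning rate and step count are arbitrary illustrative choices. The `backward` function applies the chain rule from the output back to the first weight, and the loop makes small repeated adjustments.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w1, w2, x):
    h = sigmoid(w1 * x)  # hidden activation
    y = w2 * h           # linear output
    return h, y


def loss(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2


def backward(w1, w2, x, target):
    """Return (dL/dw1, dL/dw2) via the chain rule."""
    h, y = forward(w1, w2, x)
    d_y = y - target                # error at the output layer
    d_w2 = d_y * h                  # gradient for the output weight
    d_h = d_y * w2                  # error propagated back to the hidden unit
    d_w1 = d_h * h * (1.0 - h) * x  # through the sigmoid, then the input weight
    return d_w1, d_w2


w1, w2, x, target, lr = 0.5, -0.3, 1.0, 1.0, 0.5
initial_loss = loss(w1, w2, x, target)
for _ in range(100):
    g1, g2 = backward(w1, w2, x, target)
    w1 -= lr * g1  # minor tweak to each weight per iteration
    w2 -= lr * g2
final_loss = loss(w1, w2, x, target)
print(initial_loss, final_loss)
```

Real networks repeat the same backward step across many units and layers, which is where efficient gradient computation matters.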

Three critical factors enabled this breakthrough:

- Efficient gradient computation across hidden layers

- Iterative refinement of decision boundaries

- Scalable error correction mechanisms

Technological Advancements and Scaling Up

Early implementations faced hardware limitations. Modern training processes leverage parallel processing to handle billions of parameters. This scalability transformed theoretical models into practical tools capable of recognising complex patterns.

| Aspect | Pre-1986 Methods | Backpropagation Approach |
|---|---|---|
| Training Depth | Single-layer | Multi-layer |
| Weight Adjustment | Manual tuning | Automated gradients |
| Learning Speed | Weeks/Months | Hours/Days |

The combination of algorithmic refinement and computational power created a step change in AI development. Systems could now autonomously develop internal representations through layered function composition – a capability that underpins modern deep learning.

The Making of ImageNet and Its Impact on AI

In 2006, a bold academic project challenged conventional wisdom about machine learning’s limitations. Fei-Fei Li’s team at Princeton University began assembling what would become the world’s most extensive image collection for object recognition research. Their ambitious goal: catalogue 14 million images across 22,000 categories – a scale 700 times larger than existing datasets.

Fei-Fei Li’s Vision and Determination

Li drew inspiration from neuroscientist Irving Biederman’s estimate that humans recognise roughly 30,000 object categories. Critics dismissed the project as impractical, questioning the need for such vast data. Undeterred, Li’s team used WordNet’s hierarchy to organise categories and Amazon Mechanical Turk for labelling – completing in two years what would have taken decades manually.

Transforming Data into a Universal Benchmark

The initial 2009 release garnered minimal interest. Li then launched the ImageNet Large Scale Visual Recognition Challenge in 2010, reframing her collection as a competitive testing ground. The breakthrough came in 2012 when Alex Krizhevsky’s team achieved roughly 85% top-5 accuracy (a 15.3% error rate) using convolutional networks – outperforming the best traditional entry by more than 10 percentage points.
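Top-5 accuracy counts a prediction as correct when the true label appears among the model's five highest-scoring guesses. The sketch below shows the calculation on made-up scores for a four-class toy problem, using k = 1 and k = 2 to keep it readable; the function name and data are hypothetical, not taken from the challenge's evaluation code.

```python
def top_k_accuracy(scores, labels, k=5):
    """Fraction of samples whose true label is among the k best-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Toy scores: one row per image, one column per class (illustrative values).
scores = [
    [0.60, 0.30, 0.10, 0.00],  # true class 1 is the second guess
    [0.10, 0.20, 0.30, 0.40],  # true class 3 is the first guess
    [0.50, 0.05, 0.30, 0.15],  # true class 1 is the last guess
]
labels = [1, 3, 1]

print(top_k_accuracy(scores, labels, k=1))
print(top_k_accuracy(scores, labels, k=2))
```

Allowing several guesses makes the metric more forgiving for images that contain multiple plausible objects.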

| Aspect | Pre-ImageNet | Post-ImageNet |
|---|---|---|
| Training Examples | Thousands | Millions |
| Object Categories | ~100 | 22,000+ |
| Model Accuracy | 75% | 95% (2023) |

This data-driven approach proved deep learning’s superiority in handling real-world complexity. By providing abundant images for training, ImageNet enabled systems to discern patterns beyond human-designed features – revolutionising computer vision across industries from healthcare to autonomous systems.

The Role of GPUs and CUDA in Neural Network Advancements

Mid-2000s computing faced a critical juncture – traditional processors struggled with complex mathematical tasks. While gaming hardware pushed graphical boundaries, few saw its potential for scientific computations. One visionary recognised this untapped power.

Jensen Huang’s Visionary Contributions

Nvidia’s CEO bet £1.2 billion on adapting graphics chips for general-purpose processing. The 2006 CUDA launch let developers harness thousands of parallel cores – a radical departure from CPU designs. Critics dismissed it as overengineering until researchers discovered its machine learning potential.

Leveraging CUDA for Accelerated Deep Learning

Geoffrey Hinton’s team demonstrated CUDA’s prowess in 2009. Training speech recognition networks took days instead of months. This breakthrough hinged on GPUs executing thousands of calculations simultaneously – ideal for the matrix operations at the heart of machine learning.
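Why matrix operations suit parallel hardware can be seen in a plain Python sketch. This is ordinary sequential code, not CUDA; it only illustrates that every cell of a matrix product is an independent dot product, so in principle each could be handed to a separate core. The function names are chosen for the example.

```python
def dot(row, col):
    """Dot product for one output cell; needs no other cell's result."""
    return sum(a * b for a, b in zip(row, col))


def matmul(a, b):
    """Matrix product built from independent dot products."""
    cols = list(zip(*b))  # columns of b
    return [[dot(row, col) for col in cols] for row in a]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = matmul(a, b)
print(c)  # [[19, 22], [43, 50]]

# Number of cells that could be computed at the same time.
independent_cells = len(a) * len(b[0])
print(independent_cells)  # 4
```

For the large weight matrices in a neural network, the count of independent cells runs into the millions, which is the workload GPUs were built to spread across their cores.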

| Aspect | CPU Performance | GPU Performance |
|---|---|---|
| Training Time | 6 Weeks | 3 Days |
| Parallel Tasks | 24 Threads | 5,120 Cores |
| Energy Efficiency | 0.4 TFLOPS/W | 14 TFLOPS/W |

Financial pressures nearly sank Nvidia during early adoption years. Their stock plummeted 70% by 2008 as CUDA gained minimal traction. Persistence paid off when deep learning’s hunger for processing power turned GPUs into essential tools.

Today, CUDA accelerates 90% of neural network training globally. This journey from gaming hardware to AI cornerstone illustrates how technological bets can reshape entire industries when vision meets practical application.

Geoffrey Hinton and the Revival of Neural Networks

During the 1970s AI winter, Geoffrey Hinton pursued an unconventional path. While most researchers abandoned biologically inspired approaches, he championed neural networks across six institutions in 10 years. This academic odyssey – from Sussex to Toronto – laid groundwork for today’s machine learning revolution.

Breakthroughs with Backpropagation Algorithms

Hinton’s 1986 paper with Rumelhart and Williams addressed a critical challenge: how systems learn from mistakes. Their refined backpropagation method enabled multi-layer networks to adjust internal parameters systematically. This transformed training from guesswork into mathematical precision.

Early tests proved revolutionary. A network could now recognise handwritten digits after analysing 10,000 examples – a task impossible for single-layer models. The technique’s scalability became evident as computational power grew.

Influence on the Modern Machine Learning Landscape

Hinton’s Toronto lab became a talent incubator. Yann LeCun, a postdoctoral researcher there in the late 1980s, went on to develop convolutional neural networks at AT&T Bell Labs; systems built on them were reading more than 10% of US cheques by the late 1990s. These practical implementations silenced critics who dismissed deep learning as theoretical.

Three lasting impacts emerged:

- Shift from rule-based to data-driven AI

- Standardisation of iterative training processes

- Cross-pollination between neuroscience and computer science

Hinton’s persistence through lean years redefined artificial intelligence’s trajectory. His work proves that foundational research, though initially overlooked, can reshape technology decades later.

How Neural Networks Learn: Process and Training

Digital cognition emerges through layered transformations of data. At its core lies an intricate dance between numerical values and adaptive connections – a process mirroring biological learning principles. Let’s dissect the machinery powering this technological marvel.

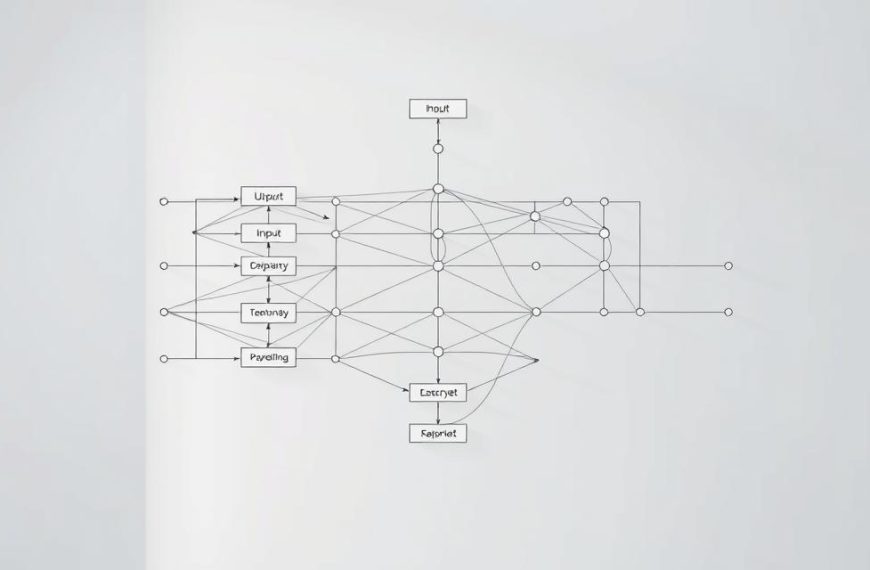

Understanding Weights, Layers, and Neurons

Each artificial neuron operates as a miniature decision-maker. It receives multiple inputs, applies adjustable weights, then calculates an output through activation functions. These components form the building blocks of machine intelligence.
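A single neuron of this kind fits in a few lines. The sketch below assumes a sigmoid activation function, one common choice among several; the input values, weights and bias are arbitrary numbers picked for illustration.

```python
import math


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# The weighted sum here is 2.0 - 2.0 = 0, the sigmoid's midpoint.
output = neuron([1.0, 0.0], weights=[2.0, -1.0], bias=-2.0)
print(output)  # 0.5
```

Training changes only `weights` and `bias`; the structure of the calculation stays the same.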

Consider handwriting recognition systems. Initial training begins with random weight assignments – akin to a child’s first scribbles. Through thousands of examples, connections strengthen or weaken based on performance:

| Aspect | Initial State | Trained State |
|---|---|---|
| Weight Values | Random (-1 to 1) | Precision-tuned |
| Output Accuracy | 10% (Guesswork) | 99%+ |
| Layers Involved | Single | 15+ |

Information flows through successive tiers of neurons, each refining patterns detected by prior layers. Early tiers might recognise edges in images, while deeper ones identify complex shapes like numerals. This hierarchical processing enables sophisticated interpretation.
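How an early tier might respond to edges can be sketched with a tiny sliding-window filter of the kind used in convolutional layers. The image and kernel below are hand-picked for illustration; in a trained network the kernel values are learned rather than written by hand. As in most deep learning libraries, the operation shown is technically cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Dark region (0) on the left, bright region (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1]]  # responds to a left-to-right jump in brightness

response = conv2d(image, kernel)
print(response)  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The non-zero column marks the vertical edge; deeper layers would combine many such responses into larger shapes.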

Modern systems employ mathematical tricks to accelerate learning. As researcher Yann LeCun observed: “Gradient descent transforms random noise into structured knowledge through relentless iteration.” Each adjustment nudges weights toward configurations that minimise errors across the entire dataset.
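The idea of nudging weights toward lower error can be shown with a one-parameter sketch. It fits the model y = w * x to three made-up points by gradient descent on the mean squared error; the data, learning rate and step count are illustrative assumptions.

```python
def gradient_descent(xs, ys, lr=0.05, steps=200):
    """Fit y = w * x by repeatedly stepping against the error gradient."""
    w = 0.0  # arbitrary starting point
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad  # small step in the direction that reduces the error
    return w


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated by y = 2x
w = gradient_descent(xs, ys)
print(w)  # converges towards 2.0
```

A neural network applies the same update to every weight at once, with backpropagation supplying the gradients.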

The journey from chaotic beginnings to precise output demonstrates machine learning’s essence. Through layered architectures and continuous training, simple functions coalesce into remarkable cognitive capabilities.

Practical Applications in Image and Speech Recognition

The late 20th century witnessed a quiet revolution in automated systems. Banking institutions became early adopters of pattern recognition technology, with Yann LeCun’s convolutional networks reading more than 10% of US cheques by the late 1990s. This marked the first major commercial success for machine learning in repetitive tasks.

Transforming Industries with Deep Learning

Early systems excelled at structured tasks like handwritten digit identification. Their architecture proved ideal for analysing uniform images such as bank cheques. However, attempts to tackle complex visual recognition failed spectacularly – rotated objects or varied lighting conditions baffled 1990s models.

The ImageNet breakthrough changed everything. AlexNet’s 2012 victory demonstrated how layered architectures could interpret diverse images with unprecedented accuracy. This achievement catalysed adoption across sectors, from medical diagnostics to retail inventory systems.

Modern deep learning models handle end-to-end processing without manual feature engineering. Speech systems now decode regional accents, while visual algorithms track microscopic cell changes. These advances stem from combining vast examples with multi-layered learning approaches.

Today’s applications extend beyond static image analysis. Real-time video interpretation and contextual speech understanding demonstrate neural architectures’ versatility. This evolution from niche tool to industrial cornerstone reshapes how organisations approach complex tasks.

FAQ

What sparked the rapid advancement of convolutional neural networks?

Breakthroughs in backpropagation techniques, paired with access to vast datasets like ImageNet, enabled systems to process layers and weights more efficiently. Innovations in GPU-accelerated computations by firms like NVIDIA further reduced training times from months to days.

How did ImageNet revolutionise machine learning?

Spearheaded by Fei-Fei Li, ImageNet provided a universal benchmark with millions of labelled images. This standardised data collection allowed models to learn intricate patterns, elevating accuracy in tasks like object recognition.

Why did early artificial intelligence models struggle with real-world tasks?

Limited computational power and insufficient training examples hindered their ability to generalise. Early architectures lacked the depth to model complex relationships, unlike modern deep learning frameworks.

What role did CUDA play in advancing neural networks?

NVIDIA’s CUDA platform, under Jensen Huang’s leadership, allowed parallel processing on GPUs. This accelerated matrix computations critical for adjusting neurons and weights, making large-scale training feasible.

How do modern systems handle tasks like speech recognition?

Techniques such as sequence modelling and attention mechanisms enable networks to process temporal data. Combined with convolutional layers, these methods extract hierarchical features from audio or visual inputs.
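As a rough illustration of an attention mechanism, the sketch below implements scaled dot-product attention, one widely used formulation, in plain Python. The tiny vectors are invented for the example, and a production speech system would run this on learned representations with an optimised library rather than nested lists.

```python
import math


def softmax(values):
    """Turn scores into positive weights that sum to 1."""
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """For each query, return a weighted average of the value vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Two time steps with identical keys, so the query attends to both equally.
keys = [[1.0, 0.0], [1.0, 0.0]]
values = [[0.0, 2.0], [4.0, 6.0]]
result = attention([[1.0, 0.0]], keys, values)
print(result)  # [[2.0, 4.0]], the average of the two value vectors
```

When the keys differ, the softmax shifts weight towards the time steps most similar to the query, which is how the network focuses on the relevant part of a sequence.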

What makes Geoffrey Hinton’s work pivotal to today’s AI?

Hinton’s work on backpropagation made training multi-layer networks practical, and his later research on deep architectures helped address obstacles such as vanishing gradients. This groundwork for gradient-based optimisation of loss functions directly influenced tools like TensorFlow and PyTorch.

Why are industries adopting deep learning for practical applications?

From medical imaging to autonomous vehicles, neural networks automate complex decision-making. Their ability to learn from raw data reduces reliance on manual feature engineering, cutting development cycles.