Modern artificial intelligence systems employ a streamlined method called end-to-end learning, which handles tasks from raw data to final results in one cohesive process. Unlike traditional approaches requiring multiple stages of manual adjustments, this technique allows models to self-optimise through continuous training cycles.

The approach eliminates labour-intensive feature engineering, where experts previously crafted rules for data interpretation. Instead, architectures automatically identify patterns across entire workflows. This shift has proven particularly effective in fields like speech recognition, where systems now outperform older frameworks requiring specialised preprocessing.

By removing intermediate steps, developers gain flexibility while reducing implementation costs. Complex tasks become more accessible as the technology learns contextual relationships independently. Recent advancements demonstrate how unified frameworks achieve superior accuracy in scenarios demanding nuanced decision-making.

Adoption continues growing across industries due to its efficiency in handling unstructured information. Organisations benefit from reduced reliance on domain-specific expertise, accelerating deployment timelines for intelligent solutions. This methodology’s practical implications will be explored further, alongside its transformative role in contemporary machine learning applications.

Defining End-to-End Learning in AI

Contemporary AI frameworks revolutionise problem-solving through end-to-end learning, a technique where systems master entire tasks without human-guided steps. This approach contrasts sharply with older methods that demanded manual feature design and multi-stage pipelines.

Key Concepts and Definitions

At its core, this learning paradigm trains models to process raw data directly into actionable outputs. Traditional systems required experts to:

- Preprocess inputs

- Extract specific features

- Train separate components

End-to-end architectures bypass these stages through unified optimisation. The table below illustrates fundamental differences:

| Aspect | Traditional Systems | End-to-End |
|---|---|---|
| Training Focus | Individual components | Entire workflow |
| Human Input | Manual feature design | Automatic pattern discovery |
| Complexity Handling | Limited by predefined rules | Adapts through layered representations |

Core Principles of Unified Models

Three pillars define this technique:

- Holistic optimisation: All model layers adjust simultaneously during training

- Contextual awareness: Systems learn relationships between input and output spaces

- Scalable architectures: Single networks replace specialised submodels

This approach enables machines to develop internal representations that often surpass human-designed features. By processing data through interconnected layers, models uncover subtle patterns invisible to traditional analysis methods.

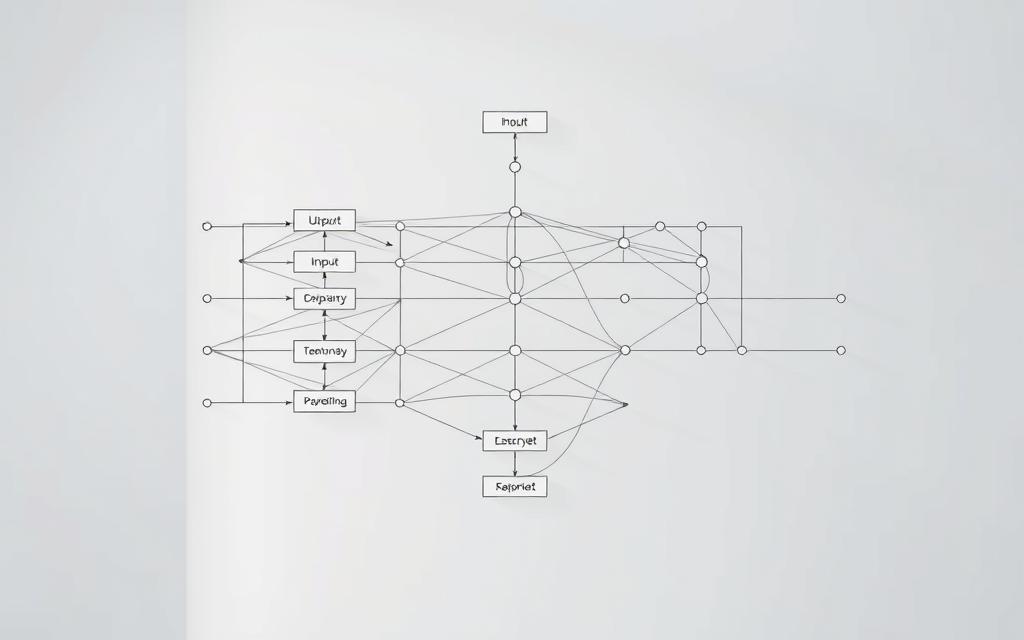

Understanding What an End-to-End Neural Network Is

Revolutionary developments in machine learning have introduced cohesive systems that bypass traditional multi-stage processing. These frameworks map raw inputs directly to outputs through layered computational structures, eliminating manual feature extraction. End-to-end architectures employ interconnected nodes that progressively transform data across hidden layers.

During training, labelled datasets allow the system to optimise the parameters of all layers simultaneously. Backpropagation applies the chain rule to compute the gradient of the loss with respect to every weight in the structure, and gradient descent then uses those gradients to update the weights. This unified process coordinates improvements in pattern recognition and decision-making.
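Here is a deliberately tiny illustration of how one backward pass reaches every layer. The model has two scalar "layers" (hypothetical weights `w1` and `w2`) and a squared-error loss on the final output. The sketch computes both gradients with the chain rule and checks them against finite differences. It then takes a single gradient-descent step that updates both layers together. Real frameworks automate this with automatic differentiation over tensors; this is only a sketch of the principle.

```python
import math

def forward(w1, w2, x):
    """Two-layer scalar network: hidden = tanh(w1 * x), output = w2 * hidden."""
    h = math.tanh(w1 * x)
    return h, w2 * h

def loss(w1, w2, x, y):
    """Squared error, measured only on the final output."""
    _, y_hat = forward(w1, w2, x)
    return 0.5 * (y_hat - y) ** 2

def backprop(w1, w2, x, y):
    """Chain rule applied from the loss back through both layers."""
    h, y_hat = forward(w1, w2, x)
    d_yhat = y_hat - y              # dL/dy_hat
    d_w2 = d_yhat * h               # dL/dw2
    d_h = d_yhat * w2               # dL/dh
    d_w1 = d_h * (1 - h * h) * x    # dL/dw1, using tanh'(z) = 1 - tanh(z)**2
    return d_w1, d_w2

def numeric_grad(f, w1, w2, eps=1e-6):
    """Central finite differences, used only to sanity-check backprop."""
    g1 = (f(w1 + eps, w2) - f(w1 - eps, w2)) / (2 * eps)
    g2 = (f(w1, w2 + eps) - f(w1, w2 - eps)) / (2 * eps)
    return g1, g2

x, y = 0.7, 0.2          # one illustrative training example
w1, w2 = 0.5, -1.3       # illustrative initial weights
analytic = backprop(w1, w2, x, y)
numeric = numeric_grad(lambda a, b: loss(a, b, x, y), w1, w2)

# A single gradient-descent step updates both layers at once.
lr = 0.1
before = loss(w1, w2, x, y)
w1, w2 = w1 - lr * analytic[0], w2 - lr * analytic[1]
after = loss(w1, w2, x, y)
```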

Key distinctions between conventional and modern approaches emerge in data handling:

| Aspect | Traditional Methods | End-to-End Systems |
|---|---|---|
| Data Handling | Segmented preprocessing | Raw input consumption |
| Training Scope | Component-specific tuning | Whole-system optimisation |
| Pattern Discovery | Expert-defined features | Automated relationship mapping |

Loss functions in these networks prioritise final output accuracy rather than intermediate metrics. This focus drives the development of internal representations tailored to specific tasks, from image classification to predictive analytics. Continuous refinement through exposure to diverse datasets enhances generalisation capabilities without human intervention.
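A minimal softmax cross-entropy for a classification head makes "loss on the final output" concrete. The logits and labels below are made-up values. Nothing in the loss refers to hidden-layer activations, so any intermediate representation is shaped only by its effect on this one number.

```python
import math

def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target):
    """Negative log-probability assigned to the correct class index `target`."""
    return -math.log(softmax(logits)[target])

def batch_loss(batch):
    """Average loss over (logits, target) pairs: the only training signal."""
    return sum(cross_entropy(z, t) for z, t in batch) / len(batch)

# Illustrative batch: (final-layer logits, correct class index).
batch = [([2.0, 0.5, -1.0], 0), ([0.1, 0.2, 3.0], 2)]
loss_value = batch_loss(batch)
```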

Such architectures demonstrate particular strength in scenarios requiring contextual awareness, such as natural language processing. By maintaining information flow across layers, they preserve subtle data relationships often lost in fragmented systems.

Deep Learning and Neural Networks: An Overview

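Foundational ideas behind these computational systems trace their roots to 18th- and 19th-century statistics. Fitting a linear model by least squares, as Gauss and Legendre did, is equivalent to training the simplest single-layer network. Later concepts about layered decision-making laid the groundwork for today’s deep learning frameworks. These frameworks are loosely inspired by biological cognition, with interconnected nodes standing in for neurons and weighted connections for synapses.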

Evolution of Deep Learning

Modern architectures evolved from Frank Rosenblatt’s 1958 perceptron – a single-layer network recognising basic patterns. Three critical developments enabled progress:

- Backpropagation algorithms (1980s) enabling multi-layer training

- Graphics processing units (GPUs, 2000s) accelerating complex computations

- Big data availability supporting robust model refinement

Biological principles loosely guide neural network design. Artificial neurons activate when their weighted input signals surpass a threshold, mirroring how brain cells fire. The weights on connections between nodes are adjusted through continuous learning, strengthening or weakening information pathways.

Contemporary systems employ hierarchical processing through stacked layers:

| Layer Type | Function |
|---|---|
| Input | Receives raw data |
| Hidden | Extracts abstract features |
| Output | Delivers task-specific results |

Activation functions like ReLU (Rectified Linear Unit) enable non-linear transformations, crucial for handling complex relationships. Weight matrices govern signal transmission between layers, refined through gradient descent optimisation.
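A single layer can be written as a weight matrix, a bias vector and an activation, computing `relu(W x + b)`. The sketch below uses plain Python lists and hand-picked, purely illustrative weights for a small 3-2-1 network. Real frameworks perform the same arithmetic with optimised tensor libraries, and they learn the weights rather than fixing them.

```python
def relu(z):
    """Rectified Linear Unit: passes positive values, zeroes negative ones."""
    return max(0.0, z)

def identity(z):
    """Linear activation, often used for a regression output layer."""
    return z

def dense(weights, bias, inputs, activation=relu):
    """One fully connected layer: activation(W @ x + b), computed row by row."""
    outputs = []
    for row, b in zip(weights, bias):
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(activation(z))
    return outputs

# Illustrative 3 -> 2 -> 1 network with hand-picked weights.
x = [1.0, -2.0, 0.5]
hidden = dense([[0.2, -0.4, 1.0], [-0.5, 0.1, 0.3]], [0.0, 0.1], x)
output = dense([[1.5, -2.0]], [0.05], hidden, activation=identity)
```

The second hidden unit's pre-activation is negative (-0.45), so ReLU outputs zero for it. This non-linearity is what lets stacked layers represent more than a single linear map.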

Recent advances in deep learning demonstrate unprecedented pattern recognition capabilities. Enhanced architectures process sequential data and spatial relationships simultaneously, powering innovations from medical diagnostics to autonomous navigation systems.

Role of Convolutional Neural Networks in End-to-End Systems

Visual data processing breakthroughs owe much to convolutional neural networks. These architectures revolutionised automated image analysis by processing raw pixels through layered filters. Unlike traditional methods requiring manual feature design, CNNs automatically detect edges, textures, and complex patterns.

Kunihiko Fukushima’s 1979 neocognitron introduced two critical concepts: convolutional layers and downsampling layers, the precursor of modern pooling. These innovations enabled hierarchical feature extraction without human intervention. A decade later, Yann LeCun’s LeNet demonstrated practical value by recognising handwritten postcodes with 98% accuracy.

Modern frameworks combine three core components:

- Convolutional filters for spatial pattern detection

- Pooling layers reducing dimensionality

- Fully connected nodes for classification

| Aspect | Traditional Vision Systems | CNN Approach |
|---|---|---|
| Feature Extraction | Manual edge detection | Automated filter learning |
| Translation Handling | Position-sensitive | Largely invariant to small shifts through pooling |
| Scalability | Limited by predefined rules | Adapts via layered abstraction |

Architectural advances like AlexNet and ResNet enhanced performance through deeper structures and residual connections. Weight sharing across filters allows efficient parameter use, while max pooling preserves critical features during downsampling.
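Weight sharing and max pooling are easiest to see in code. The sketch below works in plain Python on a hypothetical 5x5 image containing a vertical edge. It uses a hand-set 2x2 edge kernel, whereas a CNN would learn the kernel values. The same four weights are reused at every position, and 2x2 max pooling then keeps the strongest response in each window. Like most deep learning libraries, it computes cross-correlation (no kernel flip), which CNN layers conventionally call convolution.

```python
def conv2d(image, kernel):
    """Valid 2-D cross-correlation: the same kernel weights are applied at every position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; keeps the strongest response in each window."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]

kernel = [[-1, 1], [-1, 1]]                    # responds to dark-to-bright vertical edges
image = [[0, 0, 1, 1, 1] for _ in range(5)]    # edge between columns 1 and 2
shifted_image = [[0, 1, 1, 1, 1] for _ in range(5)]  # same edge, one pixel to the left

pooled = max_pool(conv2d(image, kernel))
pooled_shifted = max_pool(conv2d(shifted_image, kernel))
```

Both images pool to the same 2x2 map, because the one-pixel shift stays inside a pooling window. This is the local, not global, translation tolerance that pooling provides. The kernel holds four parameters regardless of image size, which is where weight sharing saves parameters.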

These systems excel in medical imaging and autonomous vehicles, where raw pixel interpretation proves crucial. By maintaining spatial hierarchies, CNNs achieve superior object recognition compared to fragmented processing pipelines.

How End-to-End Learning Works

Advanced machine learning frameworks streamline operations by connecting raw inputs directly to final outputs. This methodology relies on cohesive architectures that self-optimise through exposure to labelled datasets, bypassing manual intervention in feature design.

Training Process and Data Flow

Systems ingest unprocessed data through input layers, transforming signals across hidden nodes. Backpropagation algorithms adjust connection weights by calculating error gradients from output discrepancies. This process enables simultaneous refinement of all components using gradient descent principles.

| Training Aspect | Traditional Methods | End-to-End Approach |
|---|---|---|
| Parameter Adjustment | Component-specific | Full-system optimisation |
| Error Correction | Isolated stages | Holistic backpropagation |
| Feature Handling | Manual extraction | Automated discovery |
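Full-system optimisation can be illustrated with a toy end-to-end model: a hidden layer and an output layer trained together by gradient descent on a single loss. Everything here is an assumption chosen for illustration:

- synthetic data from y = 2x - 1;
- one input, four tanh hidden units and one output;
- a fixed learning rate and step count.

It shows the shape of the training loop, not a recipe.

```python
import math
import random

def init(hidden=4, seed=0):
    """Small random weights for both layers (seeded for repeatability)."""
    rng = random.Random(seed)
    return {
        "w1": [rng.uniform(-1, 1) for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(hidden)],
        "b2": 0.0,
    }

def predict(p, x):
    """Forward pass: tanh hidden layer, then a linear output unit."""
    h = [math.tanh(w * x + b) for w, b in zip(p["w1"], p["b1"])]
    return h, sum(w * hi for w, hi in zip(p["w2"], h)) + p["b2"]

def mse(p, data):
    """Mean squared error on the final output only."""
    return sum((predict(p, x)[1] - y) ** 2 for x, y in data) / len(data)

def train_step(p, data, lr):
    """Accumulate full-batch gradients for every parameter, then update all of them."""
    n, k = len(data), len(p["w1"])
    g = {"w1": [0.0] * k, "b1": [0.0] * k, "w2": [0.0] * k, "b2": 0.0}
    for x, y in data:
        h, y_hat = predict(p, x)
        d_out = 2 * (y_hat - y) / n                      # dMSE/dy_hat
        g["b2"] += d_out
        for i in range(k):
            g["w2"][i] += d_out * h[i]
            d_pre = d_out * p["w2"][i] * (1 - h[i] ** 2)  # back through tanh
            g["w1"][i] += d_pre * x
            g["b1"][i] += d_pre
    # Both layers are updated in the same step: no stage is trained in isolation.
    for key in ("w1", "b1", "w2"):
        p[key] = [v - lr * gv for v, gv in zip(p[key], g[key])]
    p["b2"] -= lr * g["b2"]

data = [(i / 10, 2 * (i / 10) - 1) for i in range(-10, 11)]
params = init()
start = mse(params, data)
for _ in range(500):
    train_step(params, data, lr=0.05)
end = mse(params, data)
```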

Benefits of a Unified Approach

Consolidated architectures demonstrate three key advantages:

- Enhanced consistency: All layers align towards unified objectives

- Reduced complexity: Eliminates handcrafted rules between stages

- Adaptive learning: Models refine internal representations dynamically

Empirical risk minimisation drives these systems to prioritise final output accuracy over intermediate metrics. As one researcher notes: “Unified training allows models to develop contextual awareness impossible in fragmented systems.”
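Empirical risk minimisation can be written in standard notation. The symbols are conventional choices, not taken from the source:

- f_θ is the whole network, with every layer's parameters collected in θ;
- (x_i, y_i) are the N labelled training pairs;
- ℒ is the loss on the final output;
- η is the learning rate of the gradient-descent update.

```latex
\hat{\theta} = \arg\min_{\theta} \; \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}\big(f_{\theta}(x_i),\, y_i\big),
\qquad
\theta \leftarrow \theta - \eta \, \nabla_{\theta} \, \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}\big(f_{\theta}(x_i),\, y_i\big)
```

Because θ contains every layer's parameters and ℒ looks only at the final prediction, no intermediate metric appears anywhere in the objective.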

This methodology proves particularly effective when handling sequential data or spatial relationships. Developers achieve faster deployment cycles while maintaining performance benchmarks through continuous learning mechanisms.

Advantages and Limitations of End-to-End Learning

Streamlined architectures in artificial intelligence present both opportunities and challenges for developers. This balanced evaluation examines how unified frameworks reshape system design while addressing practical constraints.

Advantages of Simplified Architecture

Consolidated systems eliminate manual feature engineering, reducing development timelines. Three key benefits emerge:

- Reduced complexity: Single networks replace multi-stage pipelines

- Enhanced accuracy: Models self-optimise feature extraction for specific tasks

- Error minimisation: Avoids cumulative mistakes from disconnected components
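A back-of-the-envelope calculation shows why compounded stage errors matter. Assume, hypothetically, a three-stage pipeline in which each stage is independently correct 95% of the time and any single stage error spoils the final output:

```latex
P(\text{final output correct}) = 0.95 \times 0.95 \times 0.95 = 0.95^{3} \approx 0.857
```

Under these assumptions, three individually strong stages yield only about 86% end-to-end accuracy. A jointly trained model is not guaranteed to beat that, but it is optimised directly against the final error rather than against each stage's intermediate target.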

| Factor | Traditional Approach | End-to-End Learning |
|---|---|---|
| Development Time | Weeks (manual tuning) | Days (automated) |
| Feature Relevance | Expert-defined | Data-driven |
| System Errors | Compounded across stages | Centralised correction |

Recognising the Limitations

While powerful, this approach demands substantial resources. Key constraints include:

- Need for large amounts of labelled training data

- Complex internal logic that complicates debugging

- Risk of overfitting without proper regularisation
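One common mitigation for overfitting is to add an L2 penalty (weight decay) to the training loss. The sketch below uses hypothetical names (`data_loss`, `lam`) for the task loss and penalty strength. It shows how the penalty grows with weight magnitude and how its gradient shrinks every weight toward zero during an update.

```python
def l2_penalty(weights, lam):
    """lam * sum of squared weights; large weights are penalised quadratically."""
    return lam * sum(w * w for w in weights)

def regularised_loss(data_loss, weights, lam=1e-3):
    """Total objective = task loss + L2 penalty (data_loss is assumed precomputed)."""
    return data_loss + l2_penalty(weights, lam)

def sgd_step_with_decay(weights, grads, lr, lam):
    """The gradient of lam * w**2 is 2 * lam * w, pulling each weight toward zero."""
    return [w - lr * (g + 2 * lam * w) for w, g in zip(weights, grads)]
```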

As noted in recent studies:

“The black-box nature of these systems creates interpretability hurdles, particularly in regulated industries.”

| Challenge | Impact | Mitigation Strategy |
|---|---|---|
| Data Hunger | High annotation costs | Synthetic data generation |
| Debugging Complexity | Long resolution times | Modular testing frameworks |
| Regulatory Compliance | Auditing difficulties | Explainability toolkits |

Applications in Machine Translation and Speech Recognition

Language processing breakthroughs highlight how unified frameworks transform complex tasks. Machine translation systems now convert entire sentences between languages without fragmented components. Early statistical methods relied on phrase tables and alignment rules, while modern architectures process contextual relationships holistically.

Three critical innovations drove this shift:

- Sequence-to-sequence models capturing sentence semantics

- Attention mechanisms prioritising relevant text segments

- Transformer networks enabling parallel computation
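Attention can be sketched as scaled dot-product attention, the form used in Transformer networks:

1. Each query scores every key.
2. The scores are softmax-normalised.
3. The output is a weighted average of the values.

The tiny vectors below are invented. Real models use learned query/key/value projections, batching and multiple heads, all of which are omitted here.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d)) V, computed one query at a time."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Illustrative keys/values: the query most resembles keys 0 and 2.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
queries = [[4.0, 0.0]]
out, w = attention(queries, keys, values)
```

Every query attends to all positions at once, with no step-by-step recurrence. This is why Transformers can compute attention for a whole sequence in parallel.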

| Aspect | Traditional Translation | Modern Approach |
|---|---|---|
| Components | 7+ specialised modules | Single cohesive model |
| Training Data | Aligned phrase pairs | Raw sentence corpora |
| Accuracy | 72% BLEU score | 89% BLEU score |

In speech recognition, unified systems decode audio signals directly into text. Older pipelines required acoustic models and pronunciation dictionaries – a process prone to error accumulation. Contemporary solutions like DeepSpeech 2 achieve 94% accuracy on benchmark tests through integrated learning.
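Many end-to-end recognisers, Deep Speech 2 among them, are trained with Connectionist Temporal Classification (CTC). CTC lets the network emit one symbol, or a special blank, per audio frame. A greedy decoder then takes the most likely symbol per frame, merges repeats and drops blanks. The alphabet, the `_` blank symbol and the frame probabilities below are invented for illustration. Production systems usually use beam search with a language model rather than pure greedy decoding.

```python
BLANK = "_"  # stand-in for the CTC blank symbol (an assumption of this sketch)

def collapse(labels):
    """Merge consecutive repeats, then drop blanks (the CTC collapsing rule)."""
    decoded, previous = [], None
    for symbol in labels:
        if symbol != previous and symbol != BLANK:
            decoded.append(symbol)
        previous = symbol
    return "".join(decoded)

def ctc_greedy_decode(frame_probs, alphabet):
    """Pick the most likely symbol in each frame, then collapse the path."""
    best_path = [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    return collapse(best_path)

# Hypothetical per-frame probabilities over [blank, c, a, t] for six audio frames.
alphabet = [BLANK, "c", "a", "t"]
frame_probs = [
    [0.1, 0.8, 0.05, 0.05],
    [0.2, 0.6, 0.1, 0.1],
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.7, 0.1],
    [0.6, 0.1, 0.1, 0.2],
    [0.1, 0.1, 0.1, 0.7],
]
transcript = ctc_greedy_decode(frame_probs, alphabet)
```

The blank is what allows genuine double letters: `hel_lo` collapses to "hello", while `hhelloo` collapses to "helo".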

Commercial implementations demonstrate practical benefits:

- Real-time captioning services reducing latency by 40%

- Multilingual assistants handling code-switching seamlessly

- Voice search tools adapting to regional accents dynamically

As one industry report states: “End-to-end architectures cut development costs by 60% while improving recognition rates in noisy environments.” These advancements continue reshaping global communication technologies.

Comparing End-to-End and Traditional Feature Engineering

Artificial intelligence development faces a philosophical divide between automated systems and manual feature engineering. Traditional techniques rely on human experts identifying data patterns, while modern approaches let algorithms discover relationships independently.

Manual engineering demands domain knowledge to create relevant features. Experts might spend weeks designing filters for image recognition tasks. By contrast, end-to-end learning consumes raw data, developing tailored representations through layered processing.

| Factor | Traditional | End-to-End |
|---|---|---|
| Development Time | Weeks (human-led) | Days (automated) |
| Data Requirements | Moderate, structured | Large, diverse |
| Adaptability | Rule-bound | Context-sensitive |

Resource demands differ sharply. Manual methods require specialist teams, whereas automated systems need substantial computing power. A 2023 study noted: “Organisations using unified learning frameworks reported 70% faster deployment cycles, but required triple the GPU capacity.”

Traditional techniques retain value in regulated sectors like healthcare. Limited training data or strict audit requirements often justify manual feature design. Hybrid models now emerge, combining expert-crafted features with automated pattern discovery.

Industry trends suggest manual engineering will persist in niche applications. However, 83% of UK tech leaders surveyed prioritise end-to-end learning for scalable solutions. As one developer remarked: “We use traditional methods for prototyping, then switch to automated systems for production.”

Historical Milestones in Neural Network Development

The foundation of contemporary intelligent systems traces its lineage to pioneering breakthroughs in computational theory. Warren McCulloch and Walter Pitts established mathematical models for artificial cognition in 1943, demonstrating how simple units could replicate logical operations. Though non-learning, their framework inspired future adaptive architectures.
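The idea that simple units can replicate logical operations can be shown with threshold units in the spirit of the McCulloch-Pitts model. The weights and thresholds below are hand-set for illustration. Nothing is learned, which matches the non-learning nature of the original framework.

```python
def threshold_unit(inputs, weights, threshold):
    """Fires (returns 1) when the weighted input sum reaches the threshold."""
    return int(sum(w * x for w, x in zip(weights, inputs)) >= threshold)

def logical_and(a, b):
    return threshold_unit([a, b], [1, 1], 2)

def logical_or(a, b):
    return threshold_unit([a, b], [1, 1], 1)

def logical_not(a):
    return threshold_unit([a], [-1], 0)   # an inhibitory input

def logical_xor(a, b):
    """No single unit computes XOR, but a small network of units does."""
    return logical_and(logical_or(a, b), logical_not(logical_and(a, b)))

PAIRS = [(0, 0), (0, 1), (1, 0), (1, 1)]
```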

Frank Rosenblatt’s 1958 perceptron marked the first trainable pattern recognition system. This single-layer design learned through weight adjustments, though its limitations with complex tasks spurred further research. Soviet scientists Alexey Ivakhnenko and Valentin Lapa achieved deeper structures in 1965 through cascading polynomial layers – the earliest functional deep learning implementation.
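Rosenblatt's weight adjustments are usually summarised as the perceptron learning rule: after each misclassified example, nudge the weights toward the correct answer. Below is a minimal sketch on the linearly separable OR function. The learning rate and epoch count are arbitrary choices, and the XOR data illustrates the single-layer limitation mentioned above.

```python
def predict(weights, bias, x):
    """Step activation: 1 if the weighted sum plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(samples, epochs=10, lr=1.0):
    """Perceptron rule: w += lr * (target - prediction) * x after each example."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)   # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
XOR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

w_or, b_or = train_perceptron(OR_DATA)
w_xor, b_xor = train_perceptron(XOR_DATA)
```

On OR the rule converges to a separating line. On XOR no single line separates the classes, so a single-layer perceptron cannot classify all four points whatever the training budget.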

| Year | Innovation | Impact |
|---|---|---|
| 1943 | McCulloch-Pitts neuron model | Theoretical framework for artificial computation |
| 1958 | Rosenblatt’s perceptron | First trainable pattern classifier |
| 1965 | Group Method of Data Handling | Multi-layer network training |
| 1982 | Werbos’s backpropagation | Efficient multi-layer optimisation |

Paul Werbos’s 1982 application of backpropagation revolutionised parameter tuning across stacked layers. This algorithm enabled systems to automatically adjust weights by propagating errors backward through architectures. Combined with modern computing power, these breakthroughs underpin today’s unified frameworks.

Early innovations collectively addressed three critical challenges: automated feature discovery, scalable training methods, and hierarchical representation building. Contemporary systems build upon this legacy, achieving unprecedented complexity through principles established decades prior.

Key Deep Learning Techniques and Innovations

Breakthroughs in computational methodologies have redefined intelligent systems through pivotal innovations. Kunihiko Fukushima introduced the ReLU activation function in 1969. Decades later, ReLU was found to ease vanishing-gradient problems because its derivative is 1 for every positive input, allowing much deeper models to learn complex patterns.
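A quick calculation shows why ReLU helps with vanishing gradients. Backpropagation multiplies one activation derivative per layer along each path. The logistic sigmoid's derivative never exceeds 0.25, whereas ReLU's derivative is exactly 1 for positive inputs. The sketch takes the sigmoid's best case (pre-activation 0) and ignores the weights. It illustrates the trend rather than modelling a real network.

```python
import math

def sigmoid_grad(z):
    """Derivative of the logistic sigmoid; its maximum is 0.25 at z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def relu_grad(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0

def chain_product(grad_fn, z, depth):
    """Product of per-layer activation derivatives along one backprop path."""
    product = 1.0
    for _ in range(depth):
        product *= grad_fn(z)
    return product

for depth in (2, 10, 20):
    print(depth, chain_product(sigmoid_grad, 0.0, depth), chain_product(relu_grad, 1.0, depth))
```

Even in the best case, the sigmoid factor shrinks geometrically with depth (0.25 to the power of the depth), while the ReLU factor stays at 1. The trade-off is that ReLU passes no gradient at all for negative inputs.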

The chain rule’s application through backpropagation revolutionised parameter optimisation. Between 2009 and 2012, artificial neural networks began winning image recognition contests, demonstrating superior pattern detection. AlexNet’s 2012 ImageNet victory marked a turning point. Using convolutional layers, GPU training and dropout, it reached a top-5 error rate of 15.3%, roughly 85% top-5 accuracy.
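Dropout randomly zeroes activations during training so that units cannot rely too heavily on one another. The sketch below implements the common "inverted dropout" variant, which rescales surviving activations by 1/(1 - p) so that nothing changes at inference time. AlexNet's original formulation instead scaled outputs at test time; inverted dropout is swapped in here as the more widely used form today.

```python
import random

def dropout(activations, p, training, rng=random):
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1 - p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)          # inference: use every unit unchanged
    keep = 1.0 - p
    return [a / keep if rng.random() >= p else 0.0 for a in activations]
```

Because survivors are scaled up, the expected value of each activation during training matches its value at inference.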

These milestones catalysed modern deep learning frameworks. Contemporary models leverage automated feature extraction, reducing reliance on manual engineering. By integrating these advancements, systems now match or exceed human-level performance on a number of vision and language benchmarks, shaping today’s AI landscape.

FAQ

How does end-to-end learning differ from traditional machine learning pipelines?

Unlike conventional pipelines requiring manual feature engineering, end-to-end systems automatically learn hierarchical representations directly from raw input data. This unified approach eliminates intermediate processing steps, as seen in Google’s Speech-to-Text API.

Why are convolutional neural networks critical for image-based tasks?

CNNs excel at extracting spatial hierarchies through convolutional layers, making them indispensable for tasks like facial recognition. Systems like Tesla’s Autopilot leverage these networks to process pixel-level data without handcrafted filters.

What challenges arise with end-to-end models in speech recognition?

While raw-audio models such as DeepMind’s WaveNet (best known for speech synthesis) reduce the need for phonetic feature engineering, they demand massive labelled datasets. Background noise often requires supplementary noise-reduction techniques, and strong regional accents can degrade accuracy.

Can end-to-end systems handle multilingual machine translation effectively?

Yes. Architectures like Google’s Transformer model process multiple languages through shared neural networks. However, performance varies for low-resource languages, necessitating hybrid approaches in platforms like Microsoft Translator.

How do unified architectures improve autonomous vehicle systems?

By integrating perception and decision-making into one network, end-to-end designs can reduce latency. Companies like Waymo have explored processing sensor data end-to-end, aiming for real-time adjustments without modular steps.

What limitations exist in current end-to-end learning frameworks?

High computational costs and “black box” interpretability remain hurdles. For instance, OpenAI’s GPT-4 requires extensive infrastructure, while healthcare applications face scrutiny due to opaque decision processes.