Many professionals in artificial intelligence grapple with a persistent question: are reinforcement learning and neural networks the same thing, and do they serve identical purposes? The confusion often stems from their overlapping use in modern systems. Let’s cut through the noise.

Reinforcement learning (RL) focuses on training agents to make decisions through trial and error. These agents interact with an environment, receiving rewards or penalties based on their actions. It’s akin to teaching a child through consequences rather than direct instruction.

Neural networks (NNs), by contrast, are computational models loosely inspired by the structure of biological brains. They excel at identifying patterns in complex datasets, whether recognising faces or predicting stock trends. Layers of interconnected nodes handle this computational heavy lifting.

While both concepts fall under machine learning, they operate at different levels: reinforcement learning is a learning paradigm, whereas a neural network is a type of model. One prioritises behavioural strategies; the other specialises in data interpretation. Practitioners often combine them, using neural architectures to enhance decision-making algorithms.

Understanding this distinction matters for developing effective AI solutions. Misapplication could lead to inefficient systems or missed opportunities. As industries from healthcare to finance adopt these tools, clarity becomes non-negotiable.

Understanding Reinforcement Learning

At the core of adaptive artificial intelligence lies a decision-making framework that prioritises long-term outcomes. This approach combines goal-oriented systems with feedback mechanisms to shape behaviours through continuous environmental interaction.

Key Characteristics and Principles

Agents develop strategies by balancing immediate gains against future rewards. Trial-and-error exploration lets systems try actions and measure their consequences through penalties or incentives. Markov Decision Processes (MDPs) formalise this setting by specifying states, actions, transition probabilities and rewards. The agent’s goal is a policy that maximises the expected sum of discounted future rewards.
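The MDP idea can be made concrete with a minimal value-iteration sketch in Python. The three-state MDP, its transition probabilities, its rewards and the discount factor `GAMMA` are hypothetical values chosen for illustration. Value iteration is one standard way to solve a fully known MDP, not the only one.

```python
# Minimal value-iteration sketch on a hypothetical three-state MDP.
# TRANSITIONS[state][action] -> list of (probability, next_state, reward)
GAMMA = 0.9  # discount factor: weight of future rewards relative to immediate ones

TRANSITIONS = {
    0: {"stay": [(1.0, 0, 0.0)], "move": [(1.0, 1, 0.0)]},
    1: {"stay": [(1.0, 1, 0.0)], "move": [(0.8, 2, 1.0), (0.2, 0, 0.0)]},
    2: {},  # terminal state: no actions, value fixed at 0
}


def action_value(values, outcomes, gamma=GAMMA):
    """Expected immediate reward plus discounted value of the next state."""
    return sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)


def value_iteration(transitions, gamma=GAMMA, tol=1e-10):
    values = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s, actions in transitions.items():
            if not actions:
                continue
            # Bellman optimality update: keep the best action's expected return
            best = max(action_value(values, o, gamma) for o in actions.values())
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


def greedy_policy(transitions, values, gamma=GAMMA):
    """In each non-terminal state, pick the action with the highest expected return."""
    return {
        s: max(actions, key=lambda a: action_value(values, actions[a], gamma))
        for s, actions in transitions.items()
        if actions
    }


values = value_iteration(TRANSITIONS)
policy = greedy_policy(TRANSITIONS, values)
print(values, policy)  # policy: {0: 'move', 1: 'move'}
```

In this toy MDP the greedy policy is to `move` in both non-terminal states, because heading towards state 2 is the only way to collect a reward, even though it means waiting one step from state 0.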

Real-world Applications in Gaming, Healthcare and Finance

In gaming, algorithms master complex challenges by simulating countless scenarios; AlphaGo’s historic 2016 victory over Lee Sedol demonstrates this capability. In healthcare, researchers are exploring these systems to personalise therapies, analysing patient histories to recommend effective treatments.

Drug discovery pipelines are also exploring these methods, with the aim of shortening development timelines. Financial institutions experiment with autonomous agents for portfolio management that adapt strategies to fluctuating market conditions in pursuit of higher returns. Each application relies on iterative feedback loops, illustrating the versatility of this machine learning paradigm.

Exploring Neural Networks

Modern computational systems draw inspiration from biological processes to tackle complex tasks. These frameworks process raw data through interconnected nodes, transforming inputs into actionable insights across industries.

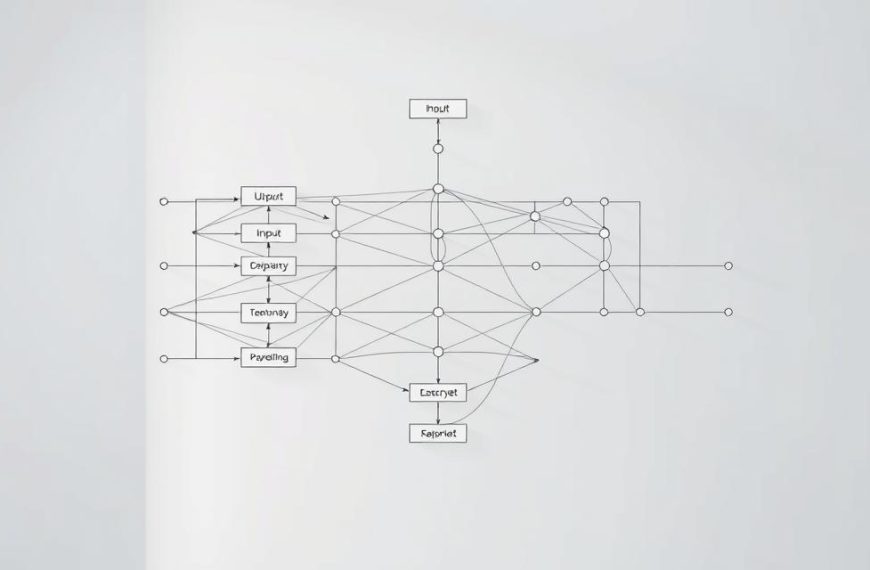

Layered Architecture and Component Roles

Three primary tiers define these systems. The input layer receives numerical data (categorical values are first encoded as numbers). One or more hidden layers then transform that data using adjustable parameters called weights and biases. The output layer delivers predictions or classifications.

Activation functions such as ReLU or sigmoid introduce non-linearity, which lets the network capture patterns that a purely linear model cannot. Each artificial neuron computes a weighted sum of its inputs, adds a bias, applies its activation function and passes the result to the next layer.
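The forward pass described above can be sketched in a few lines of plain Python. This is a minimal illustration that assumes a tiny network with two inputs, a two-neuron ReLU hidden layer and a single sigmoid output. The weights in the hypothetical `PARAMS` dictionary are arbitrary placeholders, not trained values.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of its inputs,
    adds its bias, then applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(x, params):
    hidden = dense(x, params["W1"], params["b1"], relu)  # hidden layer (ReLU)
    return dense(hidden, params["W2"], params["b2"], sigmoid)  # output layer (sigmoid)


# Hypothetical, untrained parameters for illustration only
PARAMS = {
    "W1": [[1.0, -1.0], [0.5, 0.5]],  # 2 hidden neurons x 2 inputs
    "b1": [0.0, 0.0],
    "W2": [[1.0, 1.0]],  # 1 output neuron x 2 hidden neurons
    "b2": [-1.0],
}

print(forward([2.0, 1.0], PARAMS))  # hidden = [1.0, 1.5], output = sigmoid(1.5), about 0.818
```

The ReLU is what makes the layers more than a single linear map. For the input `[1.0, 2.0]`, for example, the first hidden neuron’s weighted sum is -1.0, so ReLU switches it off entirely.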

Training Methods and Backpropagation Techniques

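Networks improve their accuracy by adjusting connection strengths. A loss function measures how far predictions are from the actual outcomes. Backpropagation then applies the chain rule to compute the gradient of that loss with respect to every weight and bias, and an optimiser such as gradient descent uses these gradients to update the parameters.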
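As a hedged illustration of backpropagation, the sketch below computes gradients for a tiny hypothetical network by applying the chain rule by hand. The network has two inputs, two tanh hidden units, one linear output and a squared-error loss. It then checks the gradients against central finite differences. The function names, parameter layout and values are assumptions made for this example; real frameworks automate this step.

```python
import copy
import math


def forward_pass(params, x):
    z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(params["W1"], params["b1"])]
    h = [math.tanh(z) for z in z1]  # hidden activations
    y_hat = sum(w * hj for w, hj in zip(params["w2"], h)) + params["b2"]  # linear output
    return h, y_hat


def loss(params, x, y):
    _, y_hat = forward_pass(params, x)
    return 0.5 * (y_hat - y) ** 2  # squared error


def backprop(params, x, y):
    """Gradients of the loss with respect to every parameter, via the chain rule."""
    h, y_hat = forward_pass(params, x)
    d_out = y_hat - y  # dL/dy_hat
    # tanh'(z) = 1 - tanh(z)^2, so the error reaching each hidden pre-activation is:
    d_z1 = [d_out * w * (1.0 - hj ** 2) for w, hj in zip(params["w2"], h)]
    return {
        "W1": [[dz * xi for xi in x] for dz in d_z1],
        "b1": d_z1,
        "w2": [d_out * hj for hj in h],
        "b2": d_out,
    }


def numerical_grad(params, x, y, key, index=(), eps=1e-6):
    """Central finite difference for one parameter, addressed by key and index path."""
    def shifted(delta):
        p = copy.deepcopy(params)
        if not index:
            p[key] += delta
        else:
            target = p[key]
            for i in index[:-1]:
                target = target[i]
            target[index[-1]] += delta
        return loss(p, x, y)
    return (shifted(eps) - shifted(-eps)) / (2 * eps)


# Hypothetical parameters and a single labelled example
PARAMS = {"W1": [[0.1, -0.2], [0.4, 0.3]], "b1": [0.0, 0.1], "w2": [0.5, -0.7], "b2": 0.2}
X, Y = [1.0, 2.0], 1.0

grads = backprop(PARAMS, X, Y)
print(grads["W1"][1][0], numerical_grad(PARAMS, X, Y, "W1", (1, 0)))  # should agree closely
```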

Supervised training relies on labelled datasets to guide adjustments. Over multiple epochs (full passes through the training data), the loss gradually decreases and performance improves. This iterative approach allows networks to handle diverse tasks, from image analysis to financial forecasting.
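The epoch-based loop can be sketched with the smallest possible network: a single sigmoid neuron, equivalent to logistic regression. It is trained by gradient descent on a hypothetical labelled dataset, the logical AND function. The learning rate and epoch count are assumed values. The point is only that the average loss per epoch falls as the passes accumulate.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical labelled dataset: inputs and target for logical AND
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 0.0), ([1.0, 0.0], 0.0), ([1.0, 1.0], 1.0)]


def train(data, epochs=2000, lr=0.5):
    w, b = [0.0, 0.0], 0.0
    history = []  # average loss per epoch
    for _ in range(epochs):  # one epoch = one full pass over the labelled data
        total = 0.0
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            # Binary cross-entropy; its gradient w.r.t. the pre-activation is (p - y)
            total += -math.log(p) if y == 1.0 else -math.log(1.0 - p)
            err = p - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
        history.append(total / len(data))
    return w, b, history


def predict(w, b, x):
    return 1.0 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0.0


w, b, history = train(DATA)
print(f"epoch 1 loss: {history[0]:.3f}, final loss: {history[-1]:.4f}")
```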

Is Reinforcement Learning a Neural Network? Debunking the Myths

A persistent confusion in AI circles conflates two distinct concepts. While both advance machine learning capabilities, their purposes and architectures diverge significantly. Clarifying this distinction prevents costly misapplications in real-world systems.

Interdependencies Between RL and NN

These technologies often collaborate rather than compete. Neural networks can approximate value functions for agents navigating dynamic environments; think of game-playing algorithms predicting optimal moves. However, simpler representations such as lookup tables, linear models or decision trees often suffice for smaller reinforcement learning problems.
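Reinforcement learning does not need a neural network, as this minimal tabular Q-learning sketch shows. The agent’s entire "model" is a dictionary holding one value per state-action pair. The corridor environment, its reward and the hyperparameters (`alpha`, `gamma`, `epsilon`, episode count) are hypothetical choices for the example.

```python
import random

# Hypothetical corridor: states 0..4, start at 0, reward 1.0 for reaching state 4 (terminal)
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def greedy(q, state, rng):
    """Best-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}  # the lookup table
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit, occasionally explore a random action
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate towards reward + discounted value of the best next action
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


Q = q_learning()
print({s: max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(GOAL)})  # {0: 1, 1: 1, 2: 1, 3: 1}
```

Breaking ties at random matters here. With all values initially zero, always picking the first action would push the agent left into the wall, and it would rarely discover the reward at all.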

Neural networks also shine in supervised settings with no reinforcement component at all. Facial recognition systems, for instance, are typically trained on labelled images without any reward signal. This separation shows that the two are functionally independent despite frequent partnerships.

Common Misconceptions Explained

Three myths dominate discussions:

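- Myth 1: Reinforcement learning requires neural networks (many RL systems use lookup tables or simple linear models)

- Myth 2: Both technologies prioritise pattern recognition (pattern recognition is the neural network’s strength; reinforcement learning targets sequential decision-making)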

- Myth 3: They’re interchangeable tools (each solves unique problem types)

The critical difference lies in their objectives. One cultivates strategic decision-making through environmental feedback; the other extracts insights from static datasets. Recognising this prevents misguided implementations in areas such as healthcare diagnostics or financial forecasting.

Algorithm Comparisons: RL versus NN

Decoding algorithmic approaches reveals stark contrasts in how systems acquire knowledge. While both methods process information to make predictions, their paths diverge at foundational levels.

Trial-and-Error Learning versus Example-Based Pattern Recognition

One framework thrives on environmental interaction. Agents experiment with actions, receiving sparse feedback through rewards or penalties over extended periods. This approach resembles teaching someone chess through gameplay rather than rulebooks.

By contrast, pattern-recognition systems learn from fixed datasets. Supervised learning methods adjust parameters using immediate error signals from labelled examples, while unsupervised variants uncover hidden relationships in raw data without predefined targets.

Three critical distinctions emerge:

- Learning type: Exploration-driven strategies versus example-based pattern detection

- Feedback timing: Delayed, often sparse rewards versus immediate error correction

- Model structure: Simple tables or linear models (often sufficient for RL) versus multi-layered architectures
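The difference in feedback timing comes down to a few lines of arithmetic. In this hypothetical four-step episode the only reward arrives at the end. A reinforcement learning agent has to propagate that signal back to earlier steps, here through the standard discounted return G_t = r_t + γ·G_{t+1} with an assumed γ = 0.9. A supervised learner, by contrast, receives an error for every example straight away. Both function names are illustrative.

```python
def discounted_returns(rewards, gamma=0.9):
    """Return G_t = r_t + gamma * G_{t+1} for every step, computed backwards."""
    returns, g = [], 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        returns.append(g)
    return returns[::-1]


def supervised_errors(predictions, labels):
    """Supervised feedback: every prediction gets its own error signal at once."""
    return [p - y for p, y in zip(predictions, labels)]


# Hypothetical RL episode: no feedback until the final step
print(discounted_returns([0.0, 0.0, 0.0, 1.0]))  # approximately [0.729, 0.81, 0.9, 1.0]
# Hypothetical supervised batch: each example is corrected immediately
print(supervised_errors([0.2, 0.9, 0.4], [0.0, 1.0, 1.0]))
```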

These differences dictate practical applications. Strategic domains like robotics favour the former’s adaptability, while data-rich fields like medical imaging benefit from the latter’s precision. Choosing the right machine learning approach hinges on understanding these algorithmic fingerprints.

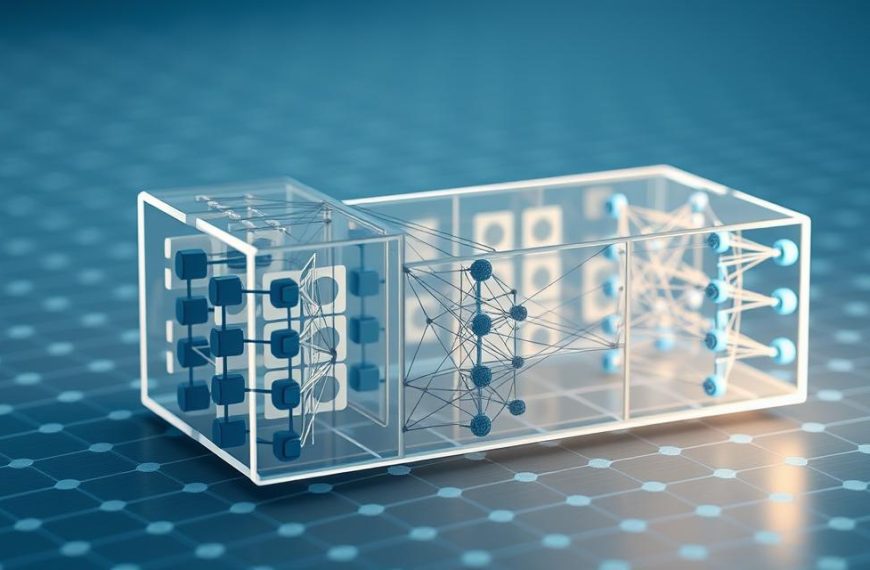

Integrating RL and NN: How They Complement Each Other

Strategic integration opens new frontiers in artificial intelligence. Combining decision-making frameworks with pattern recognition creates systems that can both adapt and predict, tackling problems that neither method handles well on its own.

Using Neural Networks as Function Approximators

Complex environments demand more capable solutions. Tabular methods store a separate value for every state-action pair, so they break down when the state space is vast or continuous. This makes neural networks a common choice for approximating value functions. Deep Q-Networks (DQN) exemplify this approach, learning directly from high-dimensional inputs such as raw pixels in Atari games.
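The core DQN idea fits in a short, hedged sketch. A small network maps a state to one Q-value per action. Training targets come from a separate target network as y = r + γ·max_a' Q_target(s', a'), or just r when the episode has ended. The two-layer network, its parameter layout and the example transitions are assumptions for illustration. A real DQN would use a deep-learning library and, for pixel inputs, a convolutional network.

```python
def q_values(params, state):
    """Tiny hypothetical Q-network: one ReLU hidden layer, one linear output per action."""
    hidden = [
        max(0.0, sum(w * s for w, s in zip(row, state)) + b)
        for row, b in zip(params["W1"], params["b1"])
    ]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(params["W2"], params["b2"])]


def td_targets(target_params, batch, gamma=0.99):
    """DQN-style regression targets for a batch of (state, action, reward, next_state, done)."""
    return [
        reward if done else reward + gamma * max(q_values(target_params, next_state))
        for _state, _action, reward, next_state, done in batch
    ]


def td_loss(online_params, target_params, batch, gamma=0.99):
    """Mean squared error between the online network's Q(s, a) and the targets."""
    targets = td_targets(target_params, batch, gamma)
    preds = [q_values(online_params, s)[a] for s, a, _r, _s2, _d in batch]
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(batch)


# Hypothetical parameters: with identity weights, Q(s) is simply ReLU(s) for a 2-D state
IDENTITY = {"W1": [[1.0, 0.0], [0.0, 1.0]], "b1": [0.0, 0.0],
            "W2": [[1.0, 0.0], [0.0, 1.0]], "b2": [0.0, 0.0]}
batch = [([1.0, 0.0], 0, 1.0, [2.0, 3.0], False), ([0.0, 1.0], 1, 0.5, [1.0, 1.0], True)]
print(td_targets(IDENTITY, batch))  # targets: 1.0 + 0.99 * 3.0 and 0.5
```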

| Aspect | Traditional RL | NN-Enhanced RL |
|---|---|---|
| State Space Handling | Limited to small, discrete state spaces | Processes large or continuous inputs |
| Feature Representation | Requires manual feature engineering | Learns representations automatically |
| Application Scope | Simple grid worlds | Robotic control, real-world simulations |

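Experience replay stores past transitions and trains on random samples of them. This reduces the correlation between consecutive updates and helps stop the network from forgetting earlier experience. Target networks stabilise training by computing learning targets with a periodically updated copy of the network, so the targets do not shift with every update. Together, these innovations enable deep learning architectures to approximate value functions and policies in dynamic scenarios.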
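Both stabilisation techniques rest on simple data-structure ideas. This sketch assumes a fixed-capacity buffer with uniform sampling. It also assumes a hard copy of the online parameters into the target network every `sync_every` steps. The class name, method names and the 1,000-step default are illustrative assumptions, and parameters are plain Python dictionaries.

```python
import copy
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions; the oldest are discarded first."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling mixes old and new experience and breaks the
        # correlation between consecutive time steps
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def maybe_sync_target(online_params, target_params, step, sync_every=1000):
    """Copy the online weights every `sync_every` steps; otherwise keep the frozen target."""
    if step % sync_every == 0:
        return copy.deepcopy(online_params)
    return target_params


buffer = ReplayBuffer(capacity=5)
for t in range(10):
    buffer.push(t, 0, 0.0, t + 1, False)
print(len(buffer), buffer.sample(3))  # 5 transitions kept (states 5..9); 3 drawn at random
```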

AlphaGo’s triumph showcases the power of this integration. Its policy network was first trained on about 30 million positions from expert human games and then refined through reinforcement learning from self-play. During play, its networks were combined with Monte Carlo tree search. Future systems could transform logistics and personalised medicine by combining pattern recognition with strategic planning.

Broader Perspectives on Machine Learning Approaches

The evolution of intelligent systems reveals layered approaches to computational problem-solving. Artificial intelligence is the broadest field, and machine learning is a subset of it. Rather than following hand-written programming rules, machine learning systems learn their behaviour from data.

Supervised and Unsupervised Learning Methods

Labelled datasets drive supervised techniques. Models predict outcomes by analysing historical examples – think spam filters identifying unwanted emails. Accuracy improves through iterative adjustments guided by known results.
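As a small, hedged illustration of learning from labelled examples, the sketch below classifies emails with k-nearest neighbours. The two features (how often the word "free" appears and the number of links) and the six training emails are invented for the example. Unlike methods that iteratively adjust parameters, k-NN simply stores the labelled data and votes at prediction time. It is a deliberately simple stand-in, not a description of how production spam filters work.

```python
import math
from collections import Counter

# Hypothetical labelled emails: (occurrences of "free", number of links) -> label
TRAINING = [
    ((0, 0), "ham"), ((1, 0), "ham"), ((0, 1), "ham"),
    ((5, 4), "spam"), ((6, 3), "spam"), ((4, 5), "spam"),
]


def predict(features, training=TRAINING, k=3):
    """Label a new email by majority vote among its k most similar labelled emails."""
    nearest = sorted(training, key=lambda example: math.dist(features, example[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


print(predict((5, 5)), predict((1, 1)))  # spam ham
```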

Unsupervised methods uncover hidden relationships in raw data. Clustering algorithms group customers by purchasing habits without predefined categories. Dimensionality reduction simplifies complex datasets, revealing essential patterns for decision-making.
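Clustering customers by purchasing habits is often done with k-means. Below is a minimal sketch. It assumes each customer is described by two hypothetical numbers (say, monthly spend and visits per month) and that the number of clusters `k` is chosen in advance. No labels are used anywhere.

```python
import math
import random


def k_means(points, k, iterations=100, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its points (keep it if the cluster is empty)
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # assignments have stabilised
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical customers: (monthly spend, visits per month)
customers = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (10.0, 10.0), (10.5, 11.0), (11.0, 10.5)]
centroids, clusters = k_means(customers, k=2)
print(centroids)
```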

Deep Learning: Expanding the Possibilities

Multi-layered architectures redefine what machine learning can achieve. Unlike traditional models requiring manual feature engineering, these systems automatically extract meaningful representations from inputs.

Three transformative impacts stand out:

- Image recognition reaching human-level accuracy on some benchmarks

- Natural language processing that captures nuances of context

- Predictive analytics handling high-dimensional data streams

Scalability remains a key advantage: performance generally keeps improving as datasets grow, making deep learning central to modern applications like medical diagnostics and autonomous vehicles. Hybrid systems now merge these approaches, creating adaptable solutions for real-world challenges.

Industry Applications and Future Trends

Cutting-edge technologies are reshaping industries through practical implementations. From diagnosing illnesses to navigating urban streets, these systems demonstrate remarkable versatility. Over a third of global enterprises now deploy such tools, with adoption rates climbing steadily.

Sector-specific Examples and Case Studies

Healthcare teams utilise convolutional architectures to analyse X-rays, spotting tumours with 94% accuracy in recent trials. Autonomous vehicles combine computer vision with decision-making frameworks, processing live video feeds to avoid collisions. Financial institutions prevent £2.3bn annually in fraud through pattern detection in transaction data.

Voice assistants showcase natural language capabilities, handling 5 billion monthly queries worldwide. Social platforms employ facial recognition to tag 100 million images daily. These examples highlight how tailored solutions address sector-specific challenges.

The Evolving Landscape of AI and Machine Learning

Generative models now create synthetic data for training medical diagnosis systems. Quantum computing experiments suggest 200x speed improvements for processing complex algorithms. Hybrid approaches merge predictive analytics with real-time adaptation, particularly in logistics optimisation.

Emerging trends focus on reducing error margins in critical applications. Foundation models enable cross-industry knowledge transfer, while edge computing brings processing power to devices like self-driving cars. As 42% of firms explore adoption strategies, these advancements promise transformative impacts across global markets.

Conclusion

In the rapidly evolving landscape of artificial intelligence, distinguishing core methodologies remains paramount for innovation. While machine learning encompasses both strategic decision-making and data interpretation, their operational frameworks differ fundamentally.

Reinforcement learning thrives on continuous environmental interaction, using delayed feedback to refine actions. Neural networks, conversely, excel at extracting patterns from static datasets through layered architectures. These distinct approaches demand tailored implementation strategies across industries.

Modern solutions often combine the two approaches, leveraging their complementary strengths. Autonomous systems might use one algorithm for real-time adjustments and another for predictive analytics. Such integrations demonstrate how specialised tools enhance performance.

Understanding these differences enables professionals to deploy machine learning technologies effectively. Whether optimising supply chains or personalising healthcare, choosing the right approach prevents costly mismatches. Clarity in application ensures organisations harness AI’s transformative potential responsibly.