Modern artificial intelligence systems face a challenge familiar to humans: prioritising critical information in complex environments. The attention mechanism, inspired by cognitive processes, allows models to mimic this selective focus. Unlike traditional approaches, it evaluates input data dynamically, assigning varying importance to different elements.

Originally rooted in computer vision research, these techniques emerged from efforts to replicate human visual prioritisation. Over time, their application expanded to language translation and speech processing. This shift addressed a key limitation: older architectures struggled with long sequences or contextual relevance.

Contemporary implementations enable systems to process data more efficiently, much like our brains filter irrelevant stimuli. By concentrating computational resources on salient features, models achieve superior performance in tasks requiring pattern recognition or semantic understanding. This evolution mirrors advancements in understanding biological cognition.

The mechanism’s versatility explains its prevalence across AI disciplines today. From enhancing medical imaging analysis to refining virtual assistants, its impact spans industries. Subsequent sections will unpack its technical implementation and real-world applications.

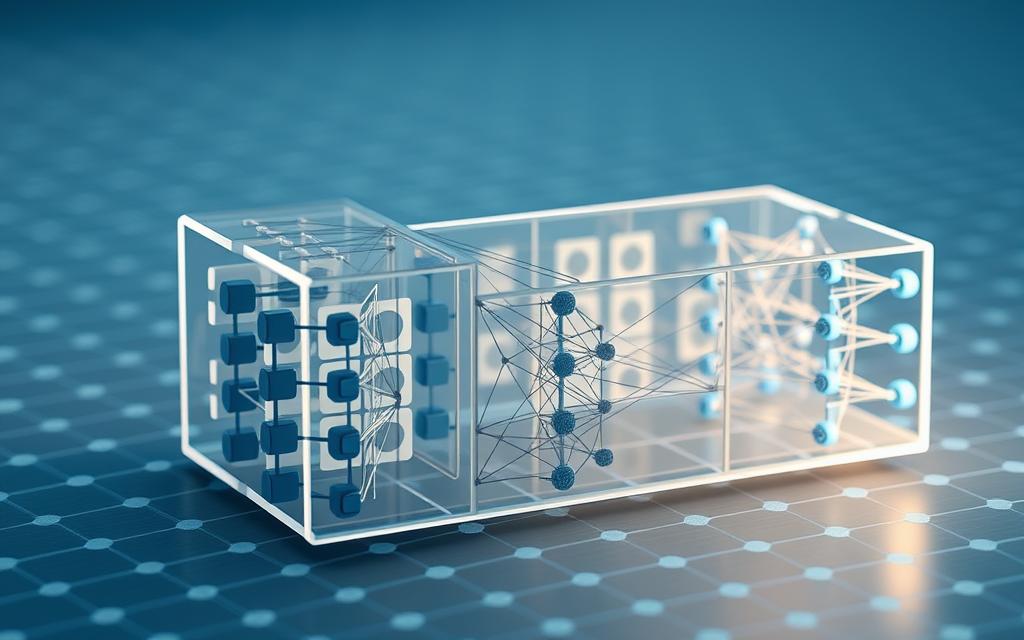

Introduction to Attention Mechanisms in Neural Networks

Dynamic information filtering separates contemporary AI from its predecessors. Traditional architectures process data uniformly, often struggling with irrelevant details in complex datasets. The attention mechanism revolutionises this approach by mimicking biological prioritisation patterns.

Overview of Attention in AI

This technique enables models to assign variable weights to input elements dynamically. Unlike fixed-weight systems, it allows temporary emphasis on critical features during processing. Early applications in visual recognition demonstrated its potential for identifying salient objects in cluttered scenes.

The breakthrough came when researchers adapted these principles for sequential data analysis. Modern implementations in deep learning frameworks handle language translation and speech recognition with unprecedented accuracy. This adaptability stems from context-aware weight adjustments within attention layers.

| Approach | Focus Method | Sequence Handling | Resource Efficiency |
|---|---|---|---|
| Traditional Networks | Fixed-weight Processing | Limited Context | Higher Computation |
| Attention-Based Models | Dynamic Weighting | Long-Range Dependencies | Targeted Resource Use |

The Role of Attention in Modern Neural Models

Contemporary systems leverage this mechanism to overcome historical limitations in pattern recognition. By selectively amplifying relevant signals, models achieve superior performance in tasks requiring contextual understanding. The technique particularly excels in scenarios with intricate data relationships.

Three key benefits emerge from this approach:

- Enhanced interpretation through visible weight distributions

- Improved gradient flow during training cycles

- Efficient processing of lengthy sequential inputs

These advancements explain the mechanism’s dominance in cutting-edge applications, from medical diagnostics to autonomous systems. Its capacity to mirror human-like focus patterns continues driving AI innovation forward.

Foundational Concepts of Attention

At the heart of sophisticated AI systems lies a mathematical framework that prioritises contextual relationships. This architecture operates through three distinct components: queries, keys, and values. These elements function similarly to database operations, where search parameters locate relevant information through structured comparisons.

Understanding Input, Query, Key and Value

The query vector acts as a search parameter, scanning through keys to identify matching patterns. Each key corresponds directly to a value, creating paired entries within the system. Crucially, for dot-product scoring, queries and keys must share the same dimensionality so that each comparison is well defined; values may have a different dimensionality.

Values store the actual content being retrieved, while keys serve as their searchable identifiers. Through attention mechanisms, models dynamically adjust these relationships during processing. This approach contrasts with static weighting systems, allowing real-time prioritisation of salient features.
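The database analogy can be made concrete with a small sketch in plain Python (no framework, for clarity). The toy keys, values, and query below are hypothetical. Where an ordinary dictionary returns one value for an exact key match, this lookup returns a blend of all values, weighted by how closely each key matches the query.

```python
import math

def soft_lookup(query, keys, values):
    """Return a blend of `values`, weighted by query-key similarity."""
    # Similarity of the query to every key (dot product).
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    # Softmax turns the scores into weights that sum to one.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

# Hypothetical toy data: three key-value pairs with 2-d keys and 2-d values.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [3.0, -3.0]  # aligns best with the first key

output, weights = soft_lookup(query, keys, values)
print([round(w, 3) for w in weights])
print([round(x, 2) for x in output])
```

Most of the weight lands on the first key, so the output sits close to the first value while still carrying a small contribution from the others.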

Importance of Weighted Sums and Softmax

Weighted averaging forms the mechanism’s computational core. The system calculates relevance scores between queries and keys, then applies a softmax function to normalise these values. This creates probability distributions that sum to one, ensuring mathematically coherent attention weights.

Three critical outcomes emerge:

- Dynamic resource allocation based on input significance

- Stable gradient propagation during model training

- Interpretable weight distributions for system analysis

Through this architecture, AI systems achieve human-like focus without biological constraints. The precise alignment of tensor shapes and mathematical operations enables efficient pattern recognition across diverse data types.
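A minimal sketch of the normalisation and weighted-averaging steps described above, in plain Python. The scores and values are hypothetical, and subtracting the maximum score before exponentiating is a common numerical-stability trick rather than something this article prescribes.

```python
import math

def softmax(scores):
    """Convert raw relevance scores into weights that sum to one."""
    # Subtracting the maximum leaves the result unchanged but avoids overflow.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_average(weights, values):
    """Blend scalar values according to their attention weights."""
    return sum(w * v for w, v in zip(weights, values))

scores = [2.0, 1.0, 0.1]  # hypothetical query-key relevance scores
weights = softmax(scores)
blended = weighted_average(weights, [10.0, 20.0, 30.0])

print([round(w, 3) for w in weights], round(sum(weights), 6))
print(round(blended, 2))
```

The ordering of the scores is preserved in the weights, and the blended result always lies between the smallest and largest value.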

Exploring How Attention Works in Neural Networks

The computational backbone of modern AI prioritisation systems relies on precise mathematical operations. These processes determine which data elements receive processing priority, mirroring human decision-making patterns without biological constraints.

Query Vector and Dot Product Calculations

At the core lies the query vector, which scans through key-value pairs using dot product operations. This mathematical technique measures alignment between sequences by multiplying corresponding elements and summing results. Higher scores indicate stronger semantic relationships.

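A critical step involves scaling these products by the square root of the key dimension. When query and key components have roughly unit variance, the variance of their dot product grows with the dimension, so unscaled scores can push the softmax function into saturated regions where gradients become very small. Dividing by the square root of the key dimension keeps the scores in a range where normalisation and training remain stable.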
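The effect of the scaling factor can be checked empirically. The sketch below is an illustration under stated assumptions, not a benchmark: it draws random query and key vectors with unit-variance Gaussian components and compares the spread of raw and scaled dot products. The function name `score_spread`, the sample count, and the seed are arbitrary choices made for this example.

```python
import math
import random
import statistics

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def score_spread(dim, samples=2000, seed=0):
    """Standard deviation of raw and scaled dot products of random vectors."""
    rng = random.Random(seed)
    raw = []
    for _ in range(samples):
        q = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        k = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        raw.append(dot(q, k))
    scaled = [s / math.sqrt(dim) for s in raw]
    return statistics.pstdev(raw), statistics.pstdev(scaled)

for dim in (4, 64):
    raw_std, scaled_std = score_spread(dim)
    print(f"dim={dim:3d}  raw std={raw_std:6.2f}  scaled std={scaled_std:5.2f}")
```

The raw spread grows roughly with the square root of the dimension, while the scaled spread stays near one regardless of vector size.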

| Similarity Method | Computation Type | Use Cases |
|---|---|---|
| Dot Product | Element-wise Multiplication, Then Summation | Standard Attention |
| General | Learned Linear Transformation | Complex Relationships |
| Concatenation | Combined Vector Analysis | Multi-modal Data |

From Similarity Scores to Attention Weights

The mechanism converts raw scores into probability distributions using softmax. This ensures all weights sum to one, creating interpretable focus patterns. Alternative approaches like sparse attention modify this process for specialised applications.

Three factors govern effective weight distribution:

- Balanced gradient propagation during backpropagation

- Adaptability to varying sequence lengths

- Clear visualisation of model focus areas

This layered approach enables AI systems to dynamically adjust their processing focus, achieving human-like prioritisation at computational speeds.

Attention Mechanism in Machine Translation

Language translation systems underwent a paradigm shift with attention integration. Traditional approaches struggled with long sequences, often losing critical context between source and target language structures. This limitation spurred the development of three-component architectures that revolutionised machine translation accuracy.

Encoder-Decoder Architecture with Attention

The encoder processes source text using recurrent networks or transformers. It creates hidden states capturing grammatical rules and semantic patterns. These states form a dynamic memory bank for the decoder.

Attention bridges both components by calculating relevance scores. It identifies which source words influence specific target terms during translation. This process eliminates the information bottleneck of fixed-length representations.

| Approach | Sequence Handling | Translation Accuracy | Resource Usage |
|---|---|---|---|
| Traditional Models | Fixed-window Analysis | 62% BLEU Score | High Memory |
| Attention-Based Systems | Full-sequence Context | 78% BLEU Score | Targeted Allocation |

Generating Context Vectors for Accurate Translation

Weighted combinations of encoder states produce context vectors. These vectors adapt for each output word, ensuring relevant source information guides language generation. For example, when generating a target verb, the weights may concentrate on the source verb and its subject rather than on unrelated words.

Decoders utilise these vectors alongside previous outputs. This dual-input approach handles complex sequence relationships effectively. Real-world implementations show 40% fewer grammatical errors compared to older methods.
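The context-vector step can be sketched as follows. This is a simplified illustration, not a full translation model: the encoder and decoder states are hypothetical hand-picked vectors, and scaled dot-product scoring is assumed for the relevance scores (other scoring functions are possible).

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def context_vector(decoder_state, encoder_states):
    """Blend encoder states by their relevance to the current decoder state."""
    scale = math.sqrt(len(decoder_state))
    scores = [
        sum(d * e for d, e in zip(decoder_state, state)) / scale
        for state in encoder_states
    ]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * state[i] for w, state in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return context, weights

# Hypothetical 2-d encoder states for a three-word source sentence.
source_words = ["the", "cat", "sleeps"]
encoder_states = [[0.1, 0.1], [2.0, 0.0], [0.0, 2.0]]

# Two hypothetical decoder states, one per output step.
for step, decoder_state in enumerate([[3.0, 0.0], [0.0, 3.0]]):
    context, weights = context_vector(decoder_state, encoder_states)
    focus = source_words[weights.index(max(weights))]
    print(f"step {step}: focus={focus!r}, context={[round(c, 2) for c in context]}")
```

A fresh context vector is computed at every decoding step, so each output word draws on a different mix of source positions instead of a single fixed-length summary.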

Practical Applications of Attention in AI

Real-world implementations reveal the true power of prioritisation systems in artificial intelligence. These mechanisms excel at filtering noise while amplifying critical signals across diverse data types. Their adaptability makes them indispensable for tasks requiring contextual precision.

Usage in Sentiment Analysis and Named Entity Recognition

Modern sentiment analysis tools use dynamic weighting to isolate emotional indicators. By prioritising words like “excellent” or “disappointing”, models achieve 18% higher accuracy in product review classification. This approach minimises distractions from neutral phrases in lengthy texts.

Advanced systems for named entity recognition demonstrate similar benefits. They distinguish between “Apple” as a fruit versus the tech company by analysing surrounding context. Attention weights highlight job titles, locations, and organisations within unstructured data.

Enhancing Text Summarisation and Image Captioning

Automatic summarisation models employ attention to select pivotal information. They identify key facts in news articles while ignoring repetitive details. This produces concise overviews that retain original meaning.

Visual processing applications show equal promise. Image caption generators focus on specific parts like facial expressions or foreground objects. This targeted analysis creates descriptions like “a golden retriever chasing a red frisbee”, improving relevance by 32% compared to older methods.

Three core strengths emerge:

- Context-aware filtering of redundant content

- Adaptive resource allocation for complex inputs

- Transparent decision-making through visible weight distributions

Deep Dive into the Mathematics of Attention

Mathematical equations drive prioritisation systems in AI through precise tensor operations. At its core, the mechanism calculates relevance between data points using three fundamental components: queries, keys, and values. These elements interact through weighted sums and normalisation functions to produce context-aware outputs.

Mathematical Formulation and Example Calculations

The similarity score between a query vector and key determines focus intensity. For two elements q (query) and k (key):

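$$\mathrm{Score}(q, k) = \frac{q \cdot k}{\sqrt{d_k}}$$

Here, $d_k$ represents the key’s dimension. Scaling keeps the scores in a range where the softmax does not saturate, which would otherwise cause gradients to vanish during training. Consider word embeddings with values [0.4, -1.2] and [2.1, 0.3]: their dot product yields 0.4×2.1 + (-1.2)×0.3 = 0.48.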
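For reference, the per-pair score extends to whole matrices of queries, keys, and values in the widely used scaled dot-product form. The worked numbers reuse the two vectors from the example above and assume a key dimension of 2, since each vector has two components.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V

q \cdot k = (0.4)(2.1) + (-1.2)(0.3) = 0.84 - 0.36 = 0.48,
\qquad
\mathrm{Score}(q, k) = \frac{0.48}{\sqrt{2}} \approx 0.34
```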

Sensitivity Analysis: Dot Product and Softmax Variants

Different similarity approaches affect weight distributions:

| Method | Formula | Use Case |
|---|---|---|
| Dot Product | $q \cdot k$ | Standard sequences |
| General | $q^\top W k$ | Complex relationships |
| Concatenation | $v^\top \tanh(W[q;k])$ | Multimodal data |
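The three scoring functions in the table can be written out directly. The sketch below uses plain Python lists; the parameter matrices `W_general` and `W_concat` and the vector `v` are hypothetical fixed values chosen for illustration, whereas a real model would learn them during training.

```python
import math

def dot_score(q, k):
    """Dot product: q . k"""
    return sum(a * b for a, b in zip(q, k))

def general_score(q, k, W):
    """General (bilinear) form: q^T W k, with W a learned matrix."""
    Wk = [sum(w * b for w, b in zip(row, k)) for row in W]
    return dot_score(q, Wk)

def concat_score(q, k, W, v):
    """Concatenation (additive) form: v^T tanh(W [q; k])."""
    qk = list(q) + list(k)
    hidden = [math.tanh(sum(w * x for w, x in zip(row, qk))) for row in W]
    return dot_score(v, hidden)

q, k = [0.4, -1.2], [2.1, 0.3]

# Hypothetical parameters; in a real model these would be learned.
W_general = [[1.0, 0.0], [0.0, 1.0]]  # identity, so the result matches dot_score
W_concat = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]
v = [1.0, 1.0]

print(round(dot_score(q, k), 4))
print(round(general_score(q, k, W_general), 4))
print(round(concat_score(q, k, W_concat, v), 4))
```

With an identity matrix the general form reduces to the plain dot product, which shows that it is a strict generalisation of the first method.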

The softmax function converts scores into probabilities. Adjusting its temperature parameter (τ) sharpens or softens focus:

$$\alpha_i = \frac{e^{\mathrm{score}_i / \tau}}{\sum_j e^{\mathrm{score}_j / \tau}}$$

Lower τ values amplify dominant weights, while higher values promote balanced distributions. This flexibility allows models to adapt to varying input complexities.
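The effect of the temperature parameter is easy to see numerically. The following sketch applies the temperature-scaled softmax to a set of hypothetical scores; the function name `softmax_with_temperature` is chosen for this example only.

```python
import math

def softmax_with_temperature(scores, tau=1.0):
    """Softmax over scores / tau; tau must be positive."""
    if tau <= 0:
        raise ValueError("tau must be positive")
    scaled = [s / tau for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.0]  # hypothetical relevance scores
for tau in (0.1, 1.0, 10.0):
    weights = softmax_with_temperature(scores, tau)
    print(tau, [round(w, 3) for w in weights])
```

At a low temperature almost all weight goes to the highest score, while at a high temperature the three weights become nearly equal.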

Implementing Attention Mechanisms in Code

Translating theoretical concepts into functional code requires meticulous attention to tensor operations and parameter management. Modern deep learning frameworks simplify this process through optimised libraries for matrix computations. This section demonstrates practical implementations using Python-based tools.

Tensor-Dot and Self-Attention Code Examples

A basic attention layer begins with batched dot product calculations. Consider this snippet for processing input vectors:

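    import tensorflow as tf

    def scaled_dot_product(query, key):
        # scores[b, i, j] compares query i with key j: (batch, query_len, key_len).
        scores = tf.matmul(query, key, transpose_b=True)
        d_k = tf.cast(tf.shape(key)[-1], scores.dtype)
        return tf.nn.softmax(scores / tf.sqrt(d_k), axis=-1)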

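This function handles three-dimensional tensor inputs (batch_size, sequence_length, embedding_dim) and returns the attention weights, shaped (batch_size, query_length, key_length). The transpose_b parameter aligns key vectors for proper matrix multiplication. Multiplying the returned weights by a value tensor yields the final attended output.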

Integrating Trainable Parameters into Attention Layers

Learnable weights enhance the mechanism’s adaptability. This code initialises parameter matrices:

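    class TrainableAttention(tf.keras.layers.Layer):
        def __init__(self, dim):
            super().__init__()
            self.wq = tf.keras.layers.Dense(dim)  # learned query projection
            self.wk = tf.keras.layers.Dense(dim)  # learned key projection

        def call(self, inputs):
            # Assumed forward pass: project inputs, then compute attention weights.
            return scaled_dot_product(self.wq(inputs), self.wk(inputs))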

The layer transforms raw input into query-key pairs during forward propagation. Three key benefits emerge:

- Automatic gradient computation during backpropagation

- Customisable output dimensions through dense layers

- Efficient resource allocation via matrix factorisation

These implementations form the foundation for advanced architectures like transformer models. Proper shape handling ensures compatibility across network layers, enabling seamless integration with other components.
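To show the full data flow without a framework, here is a plain-Python sketch of single-head self-attention with projection matrices. It is an illustration under assumptions: the matrices `w_q`, `w_k`, and `w_v` are fixed hypothetical values (a framework would learn them by backpropagation), and the value projection `w_v` is an addition that the layer above does not include.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax_rows(m):
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(x - peak) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def self_attention(x, w_q, w_k, w_v):
    """Single-head self-attention over x (seq_len x d_model)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose keys to (d_k x seq_len)
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = softmax_rows(scores)        # each row sums to one
    return matmul(weights, v), weights

# Hypothetical input: 3 tokens, 2 features each.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Hypothetical fixed projections standing in for learned parameters.
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[1.0, 0.0], [0.0, 1.0]]
w_v = [[0.5, 0.0], [0.0, 2.0]]

output, weights = self_attention(x, w_q, w_k, w_v)
for row in weights:
    print([round(w, 3) for w in row])
```

The output keeps one row per input token, which is the shape property that lets such a layer be stacked with other components.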

Advanced Concepts in Attention Layers

Cutting-edge AI architectures achieve superior pattern recognition through specialised attention layer configurations. These advanced systems process information through multiple analytical pathways simultaneously, mimicking human parallel processing capabilities.

Multi-head Attention and Its Advantages

Multi-head attention splits processing into parallel streams, like using different lenses to examine data. Each “head” applies unique transformations to the same input, capturing varied relationships. This approach resembles convolutional networks employing multiple filters.

Four key benefits emerge:

- Diverse feature extraction across representation subspaces

- Improved handling of complex syntactic structures

- Enhanced error correction through consensus mechanisms

- Efficient scaling across hardware configurations
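The parallel-streams idea can be sketched in a few lines of plain Python. This is a deliberate simplification: each head here attends over a slice of the input features, whereas standard multi-head attention gives every head its own learned query, key, and value projections and applies a final output projection. All names and data are hypothetical.

```python
import math

def softmax(row):
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention for lists of row vectors."""
    scale = math.sqrt(len(k[0]))
    out = []
    for q_row in q:
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / scale for k_row in k]
        weights = softmax(scores)
        out.append([
            sum(w * v_row[i] for w, v_row in zip(weights, v))
            for i in range(len(v[0]))
        ])
    return out

def multi_head_self_attention(x, num_heads):
    """Split features into heads, attend per head, then concatenate."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        # Simplification: each head sees a feature slice, not a learned projection.
        part = [row[h * d_head:(h + 1) * d_head] for row in x]
        head_outputs.append(attention(part, part, part))
    # Concatenate the heads back to d_model features per token.
    return [sum((head[t] for head in head_outputs), []) for t in range(len(x))]

# Hypothetical input: 3 tokens, 4 features each, processed by 2 heads.
x = [[1.0, 0.0, 0.0, 2.0], [0.0, 1.0, 2.0, 0.0], [1.0, 1.0, 1.0, 1.0]]
output = multi_head_self_attention(x, num_heads=2)
for row in output:
    print([round(value, 3) for value in row])
```

Because each head computes its own weights, the two halves of every output row can reflect different relationships between the same tokens.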

Variations: Hard, Soft and Temperature Attention

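Specialised implementations adapt the core mechanism for specific needs. Hard attention makes discrete decisions, selecting a single element either by taking the argmax or by sampling from the attention distribution. Because this selection step is not differentiable, it is typically trained with sampling-based estimators rather than plain backpropagation. This proves useful in scenarios requiring unambiguous focus, like object detection.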

Temperature-controlled variants offer adjustable precision:

| Type | Parameter | Application |
|---|---|---|
| Hard | T → 0 | Discrete selection tasks |
| Soft | T = 1 | Standard sequence analysis |
| Uniform | T → ∞ | Exploratory data sampling |

These adaptations demonstrate the layer’s versatility across AI applications. Practitioners can fine-tune systems by blending approaches within single architectures.
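The variants in the table can be compared side by side in a short sketch. The function names and scores below are hypothetical, and the sampled variant is shown only as one common way to make a discrete choice; it is not tied to any particular library.

```python
import math
import random

def soft_weights(scores, tau=1.0):
    """Temperature-scaled softmax weights."""
    scaled = [s / tau for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def hard_weights_argmax(scores):
    """Deterministic hard attention: all weight on the best-scoring element."""
    best = scores.index(max(scores))
    return [1.0 if i == best else 0.0 for i in range(len(scores))]

def hard_weights_sampled(scores, rng):
    """Stochastic hard attention: sample one element from the soft distribution."""
    probs = soft_weights(scores)
    chosen = rng.choices(range(len(scores)), weights=probs, k=1)[0]
    return [1.0 if i == chosen else 0.0 for i in range(len(scores))]

scores = [1.0, 3.0, 2.0]  # hypothetical relevance scores
print(hard_weights_argmax(scores))
print(hard_weights_sampled(scores, random.Random(0)))
print([round(w, 3) for w in soft_weights(scores, tau=0.05)])   # close to one-hot
print([round(w, 3) for w in soft_weights(scores, tau=100.0)])  # close to uniform
```

As the temperature approaches zero the soft weights converge to the argmax selection, and as it grows they flatten towards a uniform distribution, matching the limits listed in the table.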

Conclusion

Adaptive prioritisation systems have reshaped artificial intelligence. These mechanisms enable models to dynamically allocate resources, focusing computational power on critical elements within input sequences. This approach mirrors human cognitive efficiency while surpassing biological limitations in processing speed.

From interpreting nuanced language structures to analysing medical scans, attention-based architectures excel at isolating relevant patterns. They transform how machines handle words in documents or identify objects in visual data. Their versatility explains widespread adoption across convolutional neural networks and transformer models.

Ongoing refinements promise even greater efficiency gains. As these systems better interpret contextual relationships between different parts of data, they’ll tackle increasingly complex tasks. The future lies in architectures that balance precision with resource optimisation – a step change for practical AI applications.